Today, we’re diving into the exciting world of AI music generators, and at center stage is Google’s MusicLM.

These generative AI systems are all about bringing out the creative genius in technology — whipping up original sounds and music across different genres ranging from classical to hip-hop and everything in between.

It’s like having a musical maestro right in your pocket.

In this review, we’re going to take a closer look at Google’s AI-powered, cloud-based music generator, which uses machine learning to create audio clips. We’ll discuss how MusicLM works, its pros and cons, and how it could change the way we make music.

Table of Contents:

- What is an AI Music Generator from Text Prompts?

- Can Google AI Create Music?

- What is MusicLM?

- AI Prompts for MusicLM

- Types of AI Music Prompts

- A Comprehensive Review of Google MusicLM

- How to Try Google’s MusicLM

- The Future of AI Music Generators From Text Prompts

- FAQs – AI Music Generator From Text Prompt

- Conclusion

What is an AI Music Generator from Text Prompts?

First, let’s define what an AI music generator is.

An AI music generator from text prompts isn’t just any run-of-the-mill tech tool. It’s the next big thing in the all-powerful music industry.

These apps use artificial intelligence to create unique pieces of melody based on given inputs or prompts. These prompts can be in the form of text descriptions, an image, or an audio snippet.

Automating Music Creation

The arrival of generative AI has revolutionized the way we create music. The use of text prompts simplifies music creation by making it accessible for everyone – including those who may not have any formal training in music.

For instance, platforms like Boomy enable users with no musical experience to create their own songs with just a few clicks.

The best AI music generators use complex deep-learning models trained on large datasets. The data fed into the system typically consists of various musical compositions across genres, styles, and eras.

When you feed lyrics or text prompts into an AI system, it starts generating melodies according to your chosen mood and tone.

Nuances Captured by Text Prompt Music Generators

These aren’t just simple tune makers. A significant strength of these generative AI tools lies in their ability to capture nuanced emotions embedded within our phrases or sentences.

They comprehend basic moods like happiness or sadness but can also pick up subtleties such as nostalgia through sophisticated sentiment analysis techniques.

This level of understanding allows for more personalized soundtracks tailored specifically to user preferences – whether it’s something uplifting for workout sessions or soothing tracks for meditation practices.

While some critics argue that automation might hamper creativity, many artists find these tools inspiring as they provide new avenues for exploring different styles and sounds without being limited by their technical skills or knowledge about specific genres.

In addition to generating complete compositions from scratch, these algorithms can also help musicians during various stages of the creative process – be it creating guitar solos or composing harmonies for existing melodies.

New Musical Experiences

Music has always been a vital part of our lives, providing comfort, entertainment, and even aiding productivity. With the advent of generative AI tools, we are now witnessing an exciting transformation in sound generation.

A leading player in this arena is Endel, a start-up that uses generative models to create infinite streams of functional music tailored to various cognitive states. This app uses text prompts from its users to create unique, personalized soundscapes for relaxation, focus, and sleep and even reacts to the different times of the day, the weather, your heart rate, and your location.

Screenshot from Endel

The company’s innovative use of text prompts for generative AI tools allows users to influence the creative process by adjusting parameters such as intensity or texture, thereby transforming passive listening into interactive engagement with music.

Taking Music Apart

Music generation is just one part of the equation in the ever-evolving music industry. A longstanding problem in audio work is that of source separation. This involves breaking an audio recording into its separate instruments, a process now made possible with AI-powered source separation tools like Audioshake.

While it’s not yet perfect, this technology has come a long way and holds immense potential for artists.

DJs and mashup artists stand to gain unprecedented control over how they mix and remix tracks through this advanced tool. Audioshake believes that AI will open up new revenue streams for artists who allow their music to be adapted more easily for different mediums such as TV shows or films.

Potential Applications

In terms of applications, AI-driven music generators have immense potential across various domains. The entertainment industry is one obvious sector where its impact could be profound.

These tools can help musicians during the songwriting process, while indie filmmakers might find them useful for creating customized background scores without hiring professional composers every time.

Beyond just entertainment purposes, these generators can also be used in therapeutic fields like mental health counseling where calming audio can be a part of personalized treatment plans.

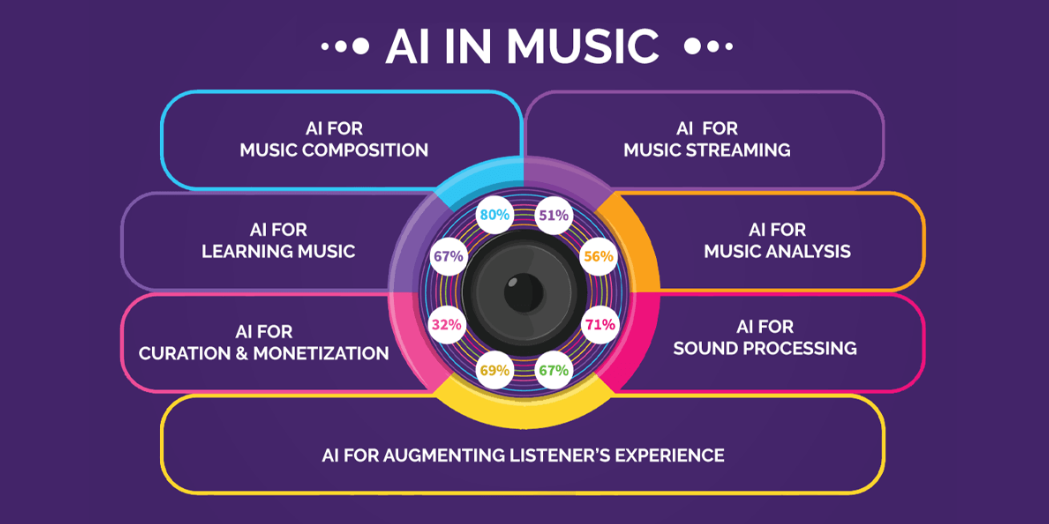

Source: AI World School

Can Google AI Create Music?

Absolutely!

The tech giant Google has recently introduced MusicLM, a groundbreaking generative AI model that can turn text prompts into high-quality music. This revolutionary tool is trained on over 280,000 hours of Western-style music and is capable of producing catchy melodies — and even entire songs — based on text descriptions.

Whether you’re looking for a soulful jazz piece or a pop dance track, all it takes is just one or two sentences to make it happen!

What is MusicLM?

This groundbreaking technology hails from Google’s Magenta project – an initiative dedicated to exploring how machine learning can create art and music.

The goal here was simple: develop an AI system capable of understanding the language of music, much as natural language processing lets text-based generative AI tools understand written language.

How does MusicLM work behind the scenes?

At its core lies a transformer-style sequence model in which music is converted into a sequence of discrete tokens, much like the words in a written sentence. This approach enables not only generating new melodies but also harmonizing existing ones by predicting subsequent tokens based on prior sequences.

Another impressive feature is the relative timing that distinguishes between note durations rather than absolute timestamps — helping maintain coherence throughout longer compositions.
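To make the idea of relative timing concrete, here is a minimal Python sketch. It is a generic illustration, not MusicLM's actual encoding: the `Note` class and the `to_relative` helper are hypothetical names invented for this example. Each note is described by how long to wait after the previous note, so the same melody produces the same sequence no matter where in a piece it starts.

```python
from dataclasses import dataclass


@dataclass
class Note:
    pitch: int       # MIDI pitch number, e.g. 60 = middle C
    start: float     # absolute onset time in seconds
    duration: float  # note length in seconds


def to_relative(notes):
    """Convert absolute onsets into (pitch, wait, duration) triples.

    `wait` is the time elapsed since the previous note's onset, so the
    encoding does not change when the whole passage is shifted in time.
    """
    ordered = sorted(notes, key=lambda n: n.start)
    events = []
    previous_start = ordered[0].start if ordered else 0.0
    for note in ordered:
        wait = round(note.start - previous_start, 6)
        events.append((note.pitch, wait, note.duration))
        previous_start = note.start
    return events


# The same three-note melody, once at the start of a piece and once 30 seconds in.
melody = [Note(60, 0.0, 0.5), Note(62, 0.5, 0.5), Note(64, 1.0, 1.0)]
shifted = [Note(n.pitch, n.start + 30.0, n.duration) for n in melody]

print(to_relative(melody))
print(to_relative(melody) == to_relative(shifted))
```

With absolute timestamps the two passages would look different to a model. With relative waits they are identical, which is one reason this kind of encoding can help longer pieces stay coherent.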

AI Prompts for MusicLM

An AI music prompt is a starting point or a creative seed that you feed to an AI music generator to “prompt” it to compose music according to your specifications.

AI music prompts act as a catalyst for musical ideas, offering composers, musicians, and enthusiasts a foundation from which they can develop their own unique pieces during the music generation process.

Backed by advanced algorithms and machine learning, AI systems turn text prompts into music by analyzing vast datasets of existing music to understand patterns, structures, and styles. When given a prompt, the AI can generate melodies, chord progressions, rhythms, or even entire musical sections that align with the given criteria.

For instance, you could request an AI to compose a relaxing piano piece with a jazzy vibe. You can then take this output and build upon it, adding your personal touch to create a complete song or composition.

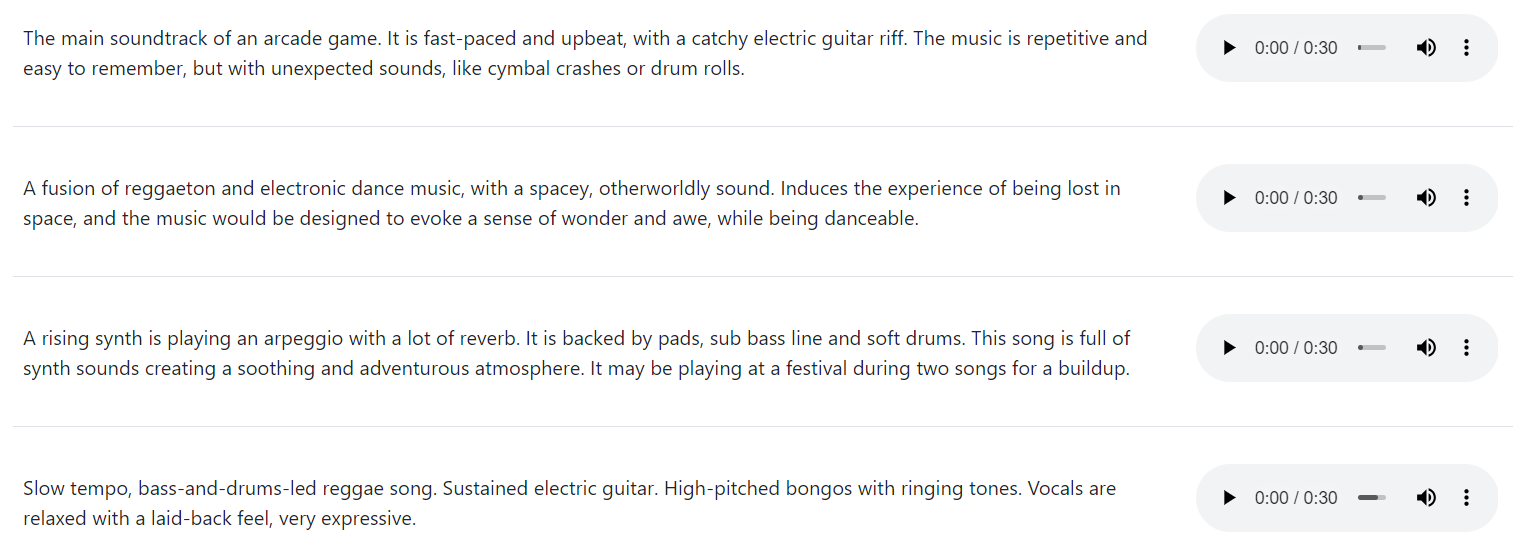

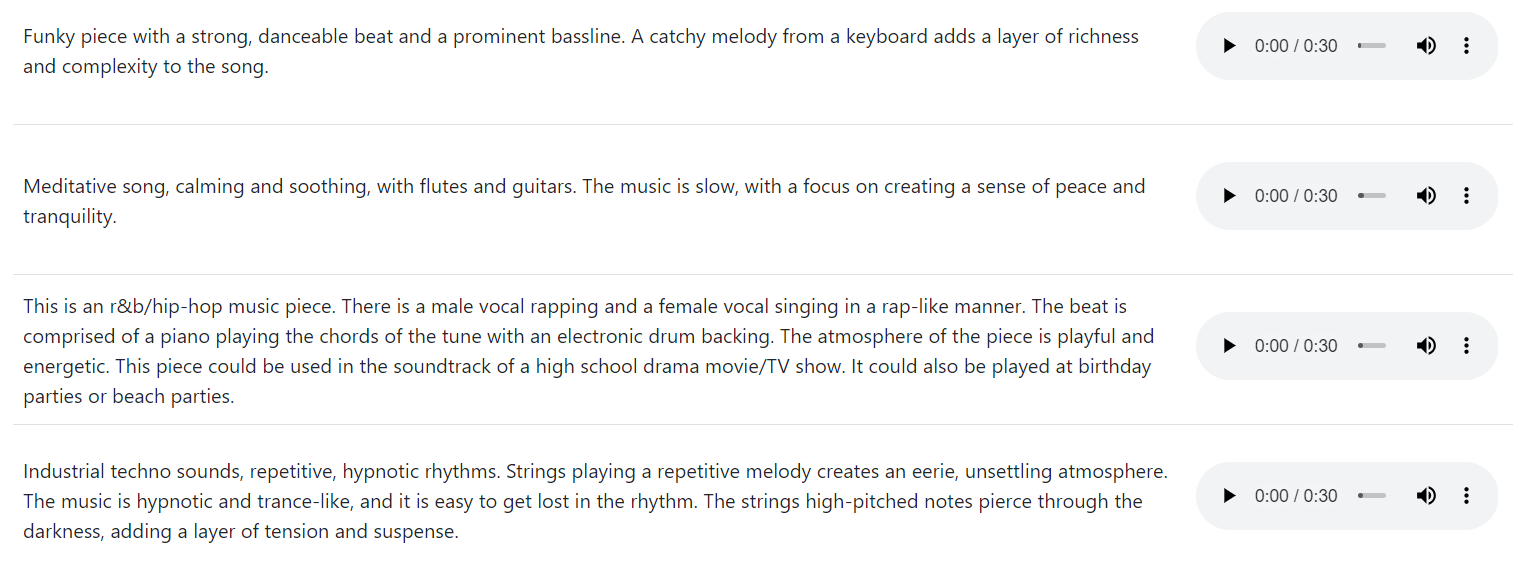

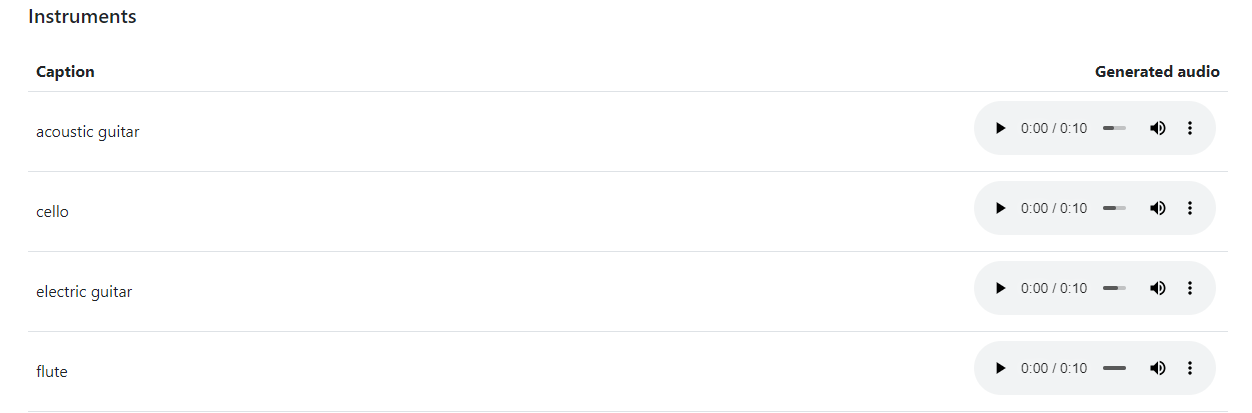

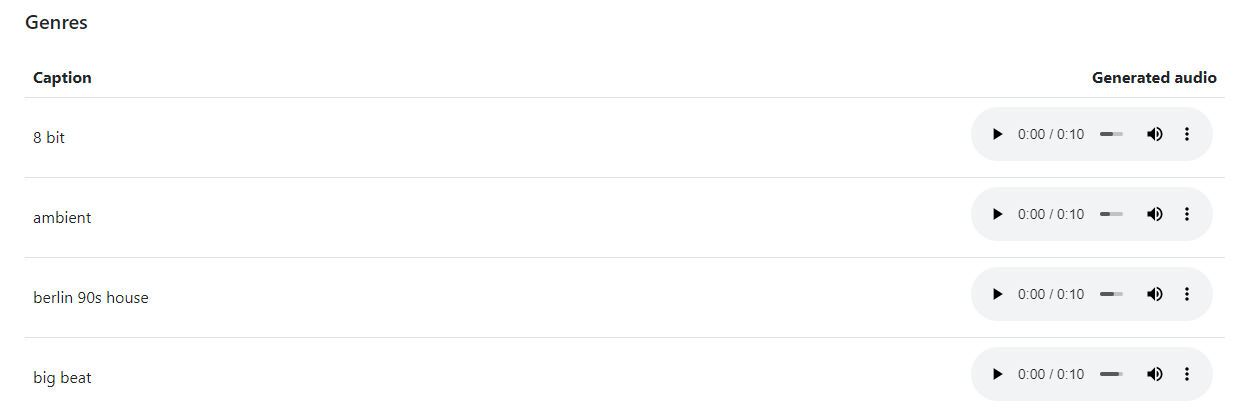

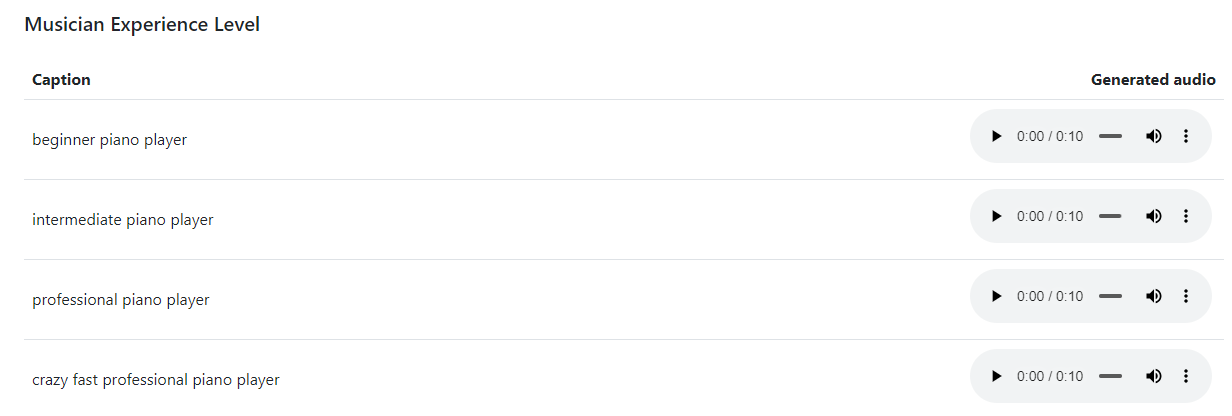

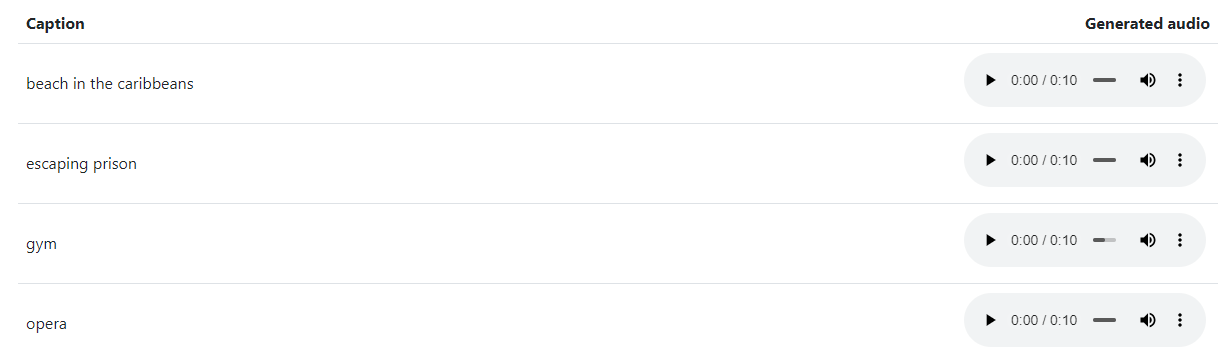

Check out some of the prompts that the Google Research team fed into Google’s MusicLM app:

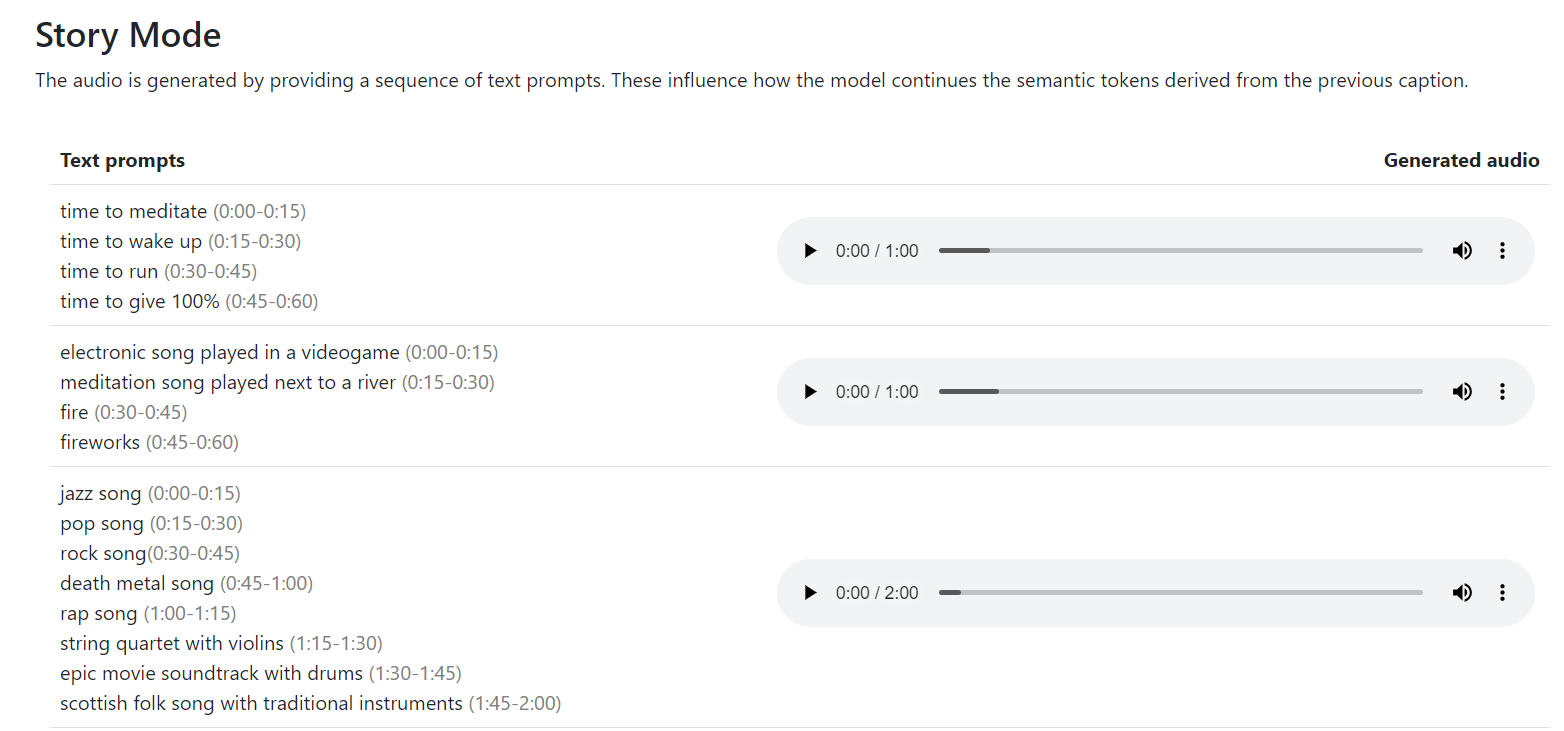

You can even create an entire album by writing a series of AI music prompts.

Types of AI Music Prompts

There are many ways to compose music with AI prompts.

Genre and Style Descriptions: This is the most common type of prompt where composers describe their desired genre, musical style, or instrument to guide the AI in generating music.

Emotional and Descriptive Prompts: You can enter descriptive text to specify your desired mood, atmosphere, or emotional expression in your AI music. For example, phrases like “serene sunset on a beach” or “intense battle in the rainforest” can inspire AI-generated music with corresponding emotions and settings.

Lyric-Driven Prompts: You can input a few lines or textual themes as prompts for the AI. The music generator will then analyze the text, extract emotional and thematic cues, and generate music that complements the provided lyrics. This way, the music enhances the emotional impact of the lyrics, creating a more immersive musical experience.

Narrative-Based Prompts: AI music prompts can be written as narratives or story outlines. The AI then translates the narrative elements into musical components, creating music that follows the arc of the story. This approach allows composers to create original soundtracks for storytelling, games, or multimedia projects.

Poetic and Metaphorical Language: Prompts with poetic or metaphorical language encourage the AI to interpret the symbolism and generate music that reflects underlying emotions or themes.

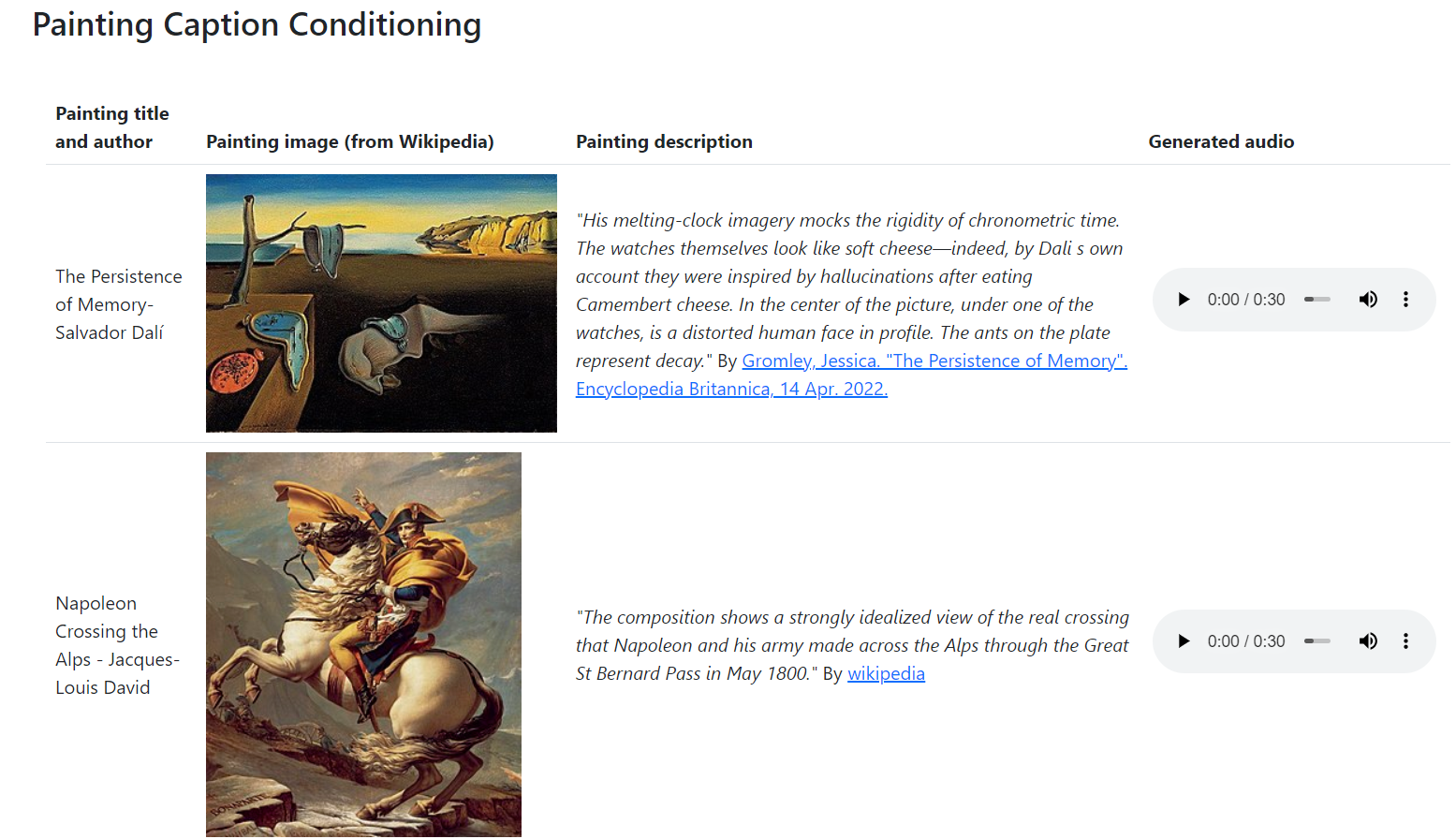

Visual Art: Another way to write prompts is to input descriptions or interpretations of visual art or imagery. The AI then translates these descriptions into musical expressions, creating a unique fusion of the visual and auditory arts. If you’re using Google’s MusicLM, you can even input the actual image for more inspiration!

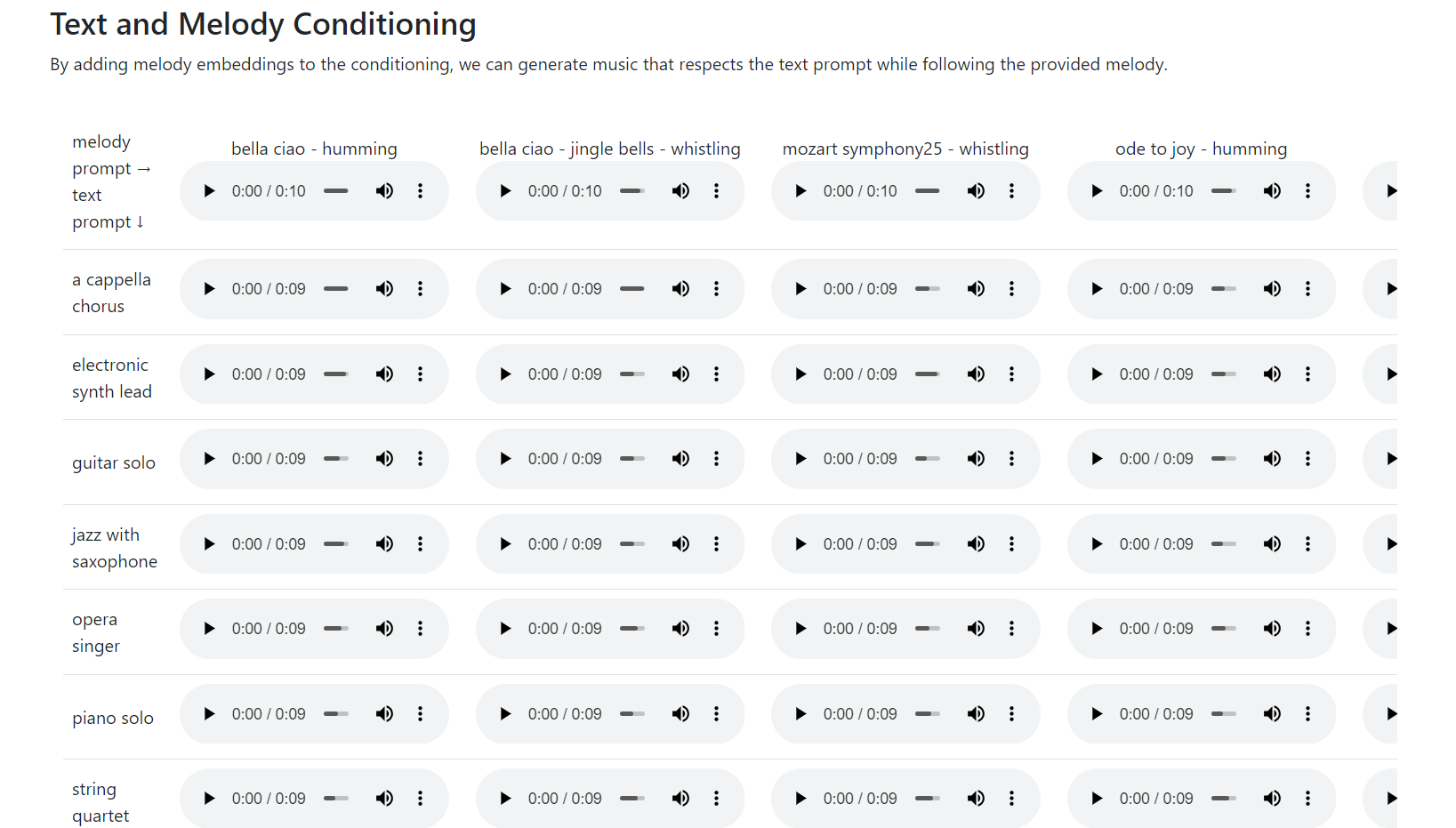

Text + Music: Novel AI models such as Google’s MusicLM can now interpret text and audio inputs to create expressive musical compositions from both media.

AI music prompts not only serve as a source of inspiration but also as a tool to break creative blocks and explore musical territories that one might not have considered before. They offer a new and exciting way for composers to collaborate with technology, making the creative process even more dynamic and intriguing for musicians of all levels.
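If you write a lot of prompts, it can help to assemble them from the same building blocks described above: genre, mood, instruments, and a scene. The short Python sketch below shows one way to do that. The `build_prompt` function is a hypothetical helper for composing text, not part of MusicLM or any Google API; you would still paste the resulting sentence into the generator yourself.

```python
def build_prompt(genre, mood=None, instruments=(), scene=None):
    """Join the pieces of a music prompt into one descriptive sentence."""
    text = f"{mood} {genre}" if mood else genre
    if instruments:
        text += " featuring " + ", ".join(instruments)
    if scene:
        text += f", evoking {scene}"
    return text


# A genre-and-style prompt with a mood and an instrument.
print(build_prompt("jazz", mood="relaxing", instruments=["piano"]))

# A descriptive prompt built around a scene.
print(build_prompt("orchestral score", scene="an intense battle in the rainforest"))
```

Keeping the pieces separate makes it easy to change one element at a time, for example swapping the mood while holding the genre fixed, and hear how the output changes.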

A Comprehensive Review of Google MusicLM

Google’s MusicLM generative AI has redefined the boundaries of music composition. Leveraging cutting-edge advancements in deep learning and natural language processing, this innovative AI system is designed to compose original music pieces from text prompts.

Turning a text prompt like “soulful jazz” into a musical track is the ultimate goal of AI music. Similar to how DALL-E and Midjourney have revolutionized image generation, Google MusicLM is supposed to offer a creative tool for musicians and even those who do not have any knowledge of melody and beat.

Let’s look at MusicLM’s capabilities, strengths, and potential limitations.

But first, the pros and cons.

Pros

- Generates royalty-free audio samples for free (currently in beta mode).

- Produces an infinite amount of clips.

- Strange but interesting experimental results.

Cons

- Not adept at following specific instructions.

- Low-fidelity audio quality.

- Garbled musical ideas.

- Doesn’t follow beats-per-minute accurately.

Turn Text Prompts to Music

Google’s experimental AI model is designed to transform your text prompts into unique audio clips. This advanced generative AI tool offers a new way of creating music, making it accessible not only to professional musicians but also to music amateurs.

The process is straightforward: Users simply type in a prompt like “soulful jazz with melodic techno” or “pop dance track featuring catchy melodies.” The AI then generates two versions of the requested song, which users can vote on to help improve the model’s performance.

In addition, this innovative technology allows melody conditioning, transforming whistled and hummed tunes into different styles based on user input.

Training Data

To ensure a diverse output, Google trained its generative models on 5 million audio clips (equivalent to 280,000 hours). The researchers also released MusicCaps, an evaluation dataset of about 5,500 clips described by actual musicians. These descriptions cover various aspects such as genre, mood, and instrument types.
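To put those training numbers in perspective, a quick back-of-the-envelope calculation shows how long the average clip would be. This uses only the two figures quoted above and assumes they are exact, which they almost certainly are not.

```python
clips = 5_000_000        # number of audio clips, as quoted above
total_hours = 280_000    # total duration in hours, as quoted above

# Convert hours to seconds, then divide by the number of clips.
seconds_per_clip = total_hours * 3600 / clips
minutes_per_clip = seconds_per_clip / 60

# About 201.6 seconds, or roughly 3.4 minutes per clip.
print(f"{seconds_per_clip:.1f} seconds, about {minutes_per_clip:.1f} minutes")
```

In other words, the average clip is about the length of a typical song.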

Technology

This machine-learning model is all about patterns. It uses transformer models that are designed specifically for understanding relationships within data sets.

In layman’s terms? The system takes in both long-term and short-term dependencies from your input data (that could be anything from musical notes to rhythm), processes them through its neural network brain, and spits out complex compositions with coherent structures.

Now you have original pieces that don’t sound jumbled or disjointed – which is quite impressive.

User Experience

According to Google, MusicLM can understand text and image prompts relating to genre, mood, and type of instrument. It can emulate the style of specific musicians and create music that’s appropriate for a given context, like studying or working out.

This makes it a turbocharged audio generator that can produce an endless amount of royalty-free samples for musicians to add to their tracks.

If you’re looking for specific audio loops to use in your project, you no longer need to comb through thousands of clips to find what you’re looking for. With a prompt-based AI music generator, you can simply instruct the machine to compose the exact kind of music you want.

So what does MusicLM actually sound like?

First, we tried creating drum sounds at 130 beats per minute. Although the AI failed to match the tempo, the output was pretty solid. When we added “crash cymbals” to the prompt, the vibe turned techno although the sound of the cymbals was hardly noticeable.
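If you want to check tempo on your own clips, one simple approach is to tap along, note the time of each beat, and compare the result with the tempo you asked for. The Python sketch below illustrates the arithmetic. The beat timestamps are made-up example values, and `estimate_bpm` is a hypothetical helper; it is not a description of how the clips in this review were measured.

```python
from statistics import median


def estimate_bpm(beat_times):
    """Estimate tempo from beat timestamps given in seconds."""
    intervals = [later - earlier for earlier, later in zip(beat_times, beat_times[1:])]
    if not intervals:
        raise ValueError("need at least two beat timestamps")
    # The median interval is less sensitive to one mistimed tap than the mean.
    return 60.0 / median(intervals)


target_bpm = 130
# At 130 BPM, beats should land about 0.46 seconds apart.
expected_interval = 60.0 / target_bpm

# Example taps: one beat every half second, for eight beats.
tapped = [i * 0.5 for i in range(8)]
measured_bpm = estimate_bpm(tapped)

print(f"expected interval: {expected_interval:.3f} s")
print(f"measured tempo: {measured_bpm:.1f} BPM, off by {measured_bpm - target_bpm:+.1f}")
```

In this example the taps work out to 120 BPM, which is 10 BPM slower than the requested 130.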

For the next test, we asked the AI to generate a song in the style of Rihanna. MusicLM is designed to NOT imitate existing artists to avoid copyright issues, so don’t expect to get real vocal hooks here.

We experimented with different prompts for solo performances (solo synthesizer) and ensembles (string quartet). For the synthesizer prompt, MusicLM created an electronic track that included a bass guitar and drums instead of just the synthesizer alone.

Finally, we tested its ability to create non-Western-style music by entering a prompt for an Indian sitar. MusicLM did produce two clips with a sitar playing in the mix.

Although MusicLM did not accurately deliver what we had wanted from each prompt, it did create some unfamiliar but interesting sounds and textures. In this way, MusicLM works as a random audio generator so you don’t have to comb through hundreds of albums to find audio snippets for your track.

With its overall unimpressive output, MusicLM isn’t replacing musicians, sound designers, producers, and sample libraries anytime soon. But it is an intriguing piece of technology that could push boundaries in sound creation.

How to Try Google’s MusicLM

If you want to try Google’s MusicLM, you have to sign up for the waitlist at Google’s AI Test Kitchen.

Once approved, you can start typing prompts in the text box on the web app. This can range from a few words to a few sentences. Be sure to describe in detail what kind of music you want the app to create, adding a specific mood and emotion.

After pressing enter, the app will start composing your tune. Within a few seconds, you’ll get two 20-second audio snippets. Listen to both tracks and choose the best sample that matches your prompt. This will help Google train its model and improve its output.

Imagine being able to turn phrases like “ambient, soft music for studying” or “catchy melodies with a melodic techno vibe” into actual tracks! Because the model was trained on millions of audio clips, even complete music amateurs can generate their requested song, and melody conditioning lets them restyle a hummed or whistled tune.

From creating catchy guitar solos for your next performance to experimenting with new soundscapes in your studio production or simply enjoying personalized background tracks while working, the possibilities are endless!

Becoming an early tester also means helping to improve this fascinating technology. When testers award a trophy to the better of the two generated tracks, that feedback helps refine the model over time, making future iterations more effective.

The Future of AI Music Generators From Text Prompts

We’re looking at a future where text-prompt generative AI tools could be instrumental in reshaping how we create music. The potential applications and developments are simply too exciting to ignore.

Imagine this: Songwriters using AI as their personal music generators based on lyrics they’ve written themselves. It’s like having your own team to take care of all the technical stuff while you focus purely on creativity.

Beyond individual artists, businesses stand to gain significantly from this technology too. Restaurants or retail stores can create custom background music tailored specifically for their environment – now that would elevate customer experience!

Trends and Developments

In recent times, there has been an uptick in collaborations between human musicians and AI systems leading to unique compositions beyond traditional musical structures – kind of like hearing AI-generated songs with a twist.

A noteworthy trend is adaptive soundtracks for video games created by algorithms that adjust game scores dynamically according to player actions – talk about immersive gaming experiences!

Challenges Ahead

No doubt the possibilities seem endless, but it’s not going to be smooth sailing.

Ethical considerations around authorship rights need careful thought. Who gets credit when instrument styles come from an algorithm?

And then there’s quality control. The lack of human touchpoints during the creation process can impact overall output quality.

All said though, one thing remains certain: the future holds interesting prospects for how we interact with text-prompt AI music generators.

FAQs – AI Music Generator From Text Prompt

Which AI generates music from prompts?

Google’s MusicLM is a prime example of an AI that generates music from text prompts, revolutionizing the process of music creation.

Is there an AI that can generate music?

Absolutely. There are several AIs capable of generating music, such as OpenAI’s MuseNet and Google’s Magenta project, including its latest addition, MusicLM.

How does an AI music generator work?

Similar to AI image generators and text-based AI tools, AI music generators utilize deep learning.

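Deep learning is a machine learning technique that enables computers to process data in a manner loosely modeled on the human brain. Trained on vast datasets of existing music, the model learns patterns, structures, and styles, then generates new audio that matches your prompt.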

What is the AI tool to convert text to song?

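One related tool is Lyrebird by Descript, which leverages advanced speech synthesis technology to turn text into spoken audio rather than music. For turning a text description into a musical track, a text-to-music generator such as Google’s MusicLM is the closer fit.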

Conclusion

AI music generators that work from text prompts are a game-changer in the realm of music creation.

No longer are we bound by traditional constraints and methods.

This technology, especially Google’s MusicLM, has shown us that AI can indeed create beautiful and complex melodies out of written descriptions.

The future holds exciting possibilities as these AI generators continue to evolve — innovation meets creativity in an unparalleled symphony!
