Have you ever wondered where ChatGPT pulls its wealth of information from when it converses with you? You’re not alone. Many users are intrigued by the seemingly endless stream of knowledge that flows from this AI and are curious about its data sources.

Where does ChatGPT get its data exactly?

In this post, we’ll peel back the layers of this large language model to reveal the nuts and bolts of its informational framework.

We’ll explore how vast datasets serve as the bedrock for ChatGPT’s responses and discuss what makes it such a powerful tool for generating human-like text.

So sit tight as we look into where ChatGPT gets its data and how to address its limitations.

Table Of Contents:

- The Architecture Behind ChatGPT’s Brain

- ChatGPT’s Extensive Training Data Universe

- Where Does ChatGPT Get Its Data?

- How ChatGPT Learns from Human Interactions

- The Role of Wikipedia and Web Content in Training ChatGPT

- Limitations and Challenges of ChatGPT

- FAQs – Where Does ChatGPT Get Its Data

- Conclusion

The Architecture Behind ChatGPT’s Brain

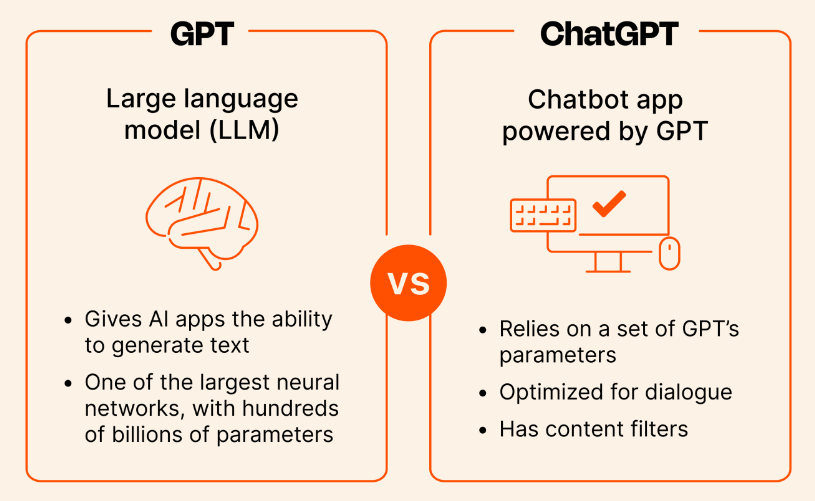

Peek under the hood of ChatGPT and you’ll find a groundbreaking model architecture known as the generative pre-trained transformer, or GPT.

This architecture lets systems like ChatGPT process and produce text that feels pretty darn human.

GPT is like a virtual librarian with an extensive collection of books in its head. Imagine you could ask this librarian any question on any topic and they would write it out for you using bits from all the different books they’ve read.

ChatGPT has learned from a vast amount of text from the internet, everything from news articles to social media posts, up to its training cutoff (April 2023 for some versions; the date varies by model). From this information, it generates new pieces of writing that can answer questions, create stories, or help with tasks. It doesn’t just spit back what it’s read. Instead, it produces text one token (a word or piece of a word) at a time, predicting what is most likely to come next based on the patterns it learned, so each answer is put together fresh for the request at hand.

Image Source: Zapier
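To make "predict what comes next, one piece at a time" a bit more concrete, here is a deliberately tiny sketch. It is not how GPT works internally: GPT uses a transformer neural network over tokens, while this toy simply counts which word follows which (a bigram model) and greedily picks the most frequent continuation. The corpus and the function names `train_bigram` and `generate` are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word follows another (a toy stand-in for pretraining)."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            counts[current][nxt] += 1
    return counts

def generate(counts: dict[str, Counter], start: str, max_words: int = 5) -> str:
    """Greedy generation: repeatedly append the most frequent next word."""
    words = [start]
    for _ in range(max_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # no known continuation for this word
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)

# A made-up three-sentence "training set".
corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigram(corpus)
print(generate(model, "the", max_words=4))  # prints "the cat sat on the"
```

Real models work on far larger vocabularies and consider the whole preceding context rather than just the last word, and they usually sample instead of always taking the top choice. That is why ChatGPT can give different answers to the same question.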

ChatGPT’s Extensive Training Data Universe

Drawing on a huge cross-section of text available online, ChatGPT has absorbed an eclectic mix, from classic literature to trendy blog posts. This variety lets it chat about almost anything you throw at it, with general knowledge that can seem boundless.

We’re not talking about surface-level stuff here; this AI tool goes deep. It gets its chops from a massive range of text published before its training cutoff date, including informative Wikipedia articles and diverse public webpages that offer the real-world context it needs to generate coherent responses.

OpenAI hasn’t published exact figures for ChatGPT’s training data, but the scale is enormous: the GPT-3 paper, for instance, reports training on roughly 300 billion tokens. That variety is what lets users like you hold conversations spanning Shakespeare to quantum physics without missing a beat.

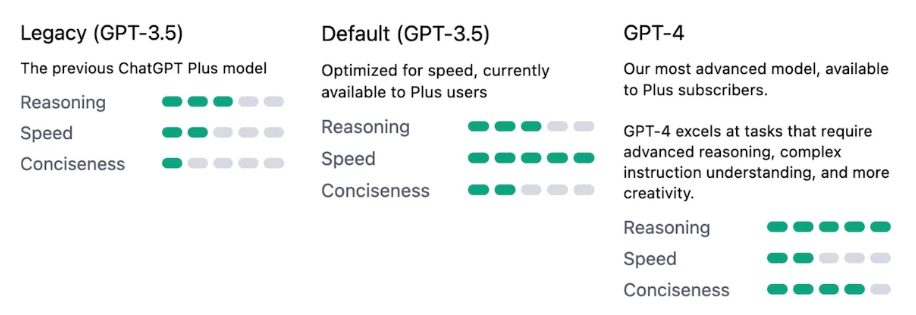

Image Source: Synthedia

Where Does ChatGPT Get Its Data?

OpenAI hasn’t published a complete list, but ChatGPT’s training data is understood to come from a diverse range of sources on the internet, including:

- Books: Excerpts and text from a wide array of books, covering different genres, topics, and languages.

- Social Media: Posts, comments, and discussions from various social media platforms like Twitter, Facebook, etc.

- Wikipedia: Articles and content from the multilingual encyclopedia Wikipedia, which covers a vast range of topics.

- News Articles: News articles from diverse news sources and outlets, providing information on current events and historical context.

- Speech and Audio Recordings: Transcripts of spoken language and possibly audio data that have been converted into text.

- Academic Research Papers: Text from scientific and academic journals, publications, and research papers across various disciplines.

- Websites: Content from websites across the internet, including blogs, company websites, and other online sources.

- Forums: Discussions and conversations from online forums and message boards like Reddit and Quora.

- Code Repositories: Text and code snippets from online code repositories like GitHub.

ChatGPT’s training data spans a broad spectrum of text, which makes it versatile and able to provide information on a wide range of topics and subjects. OpenAI has not publicly disclosed the exact distribution and proportion of data from each source.
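Because the real proportions aren’t public, the sketch below only illustrates the general idea of blending sources by weight when assembling training data. The source names and weights in `MIX_WEIGHTS` are hypothetical placeholders, not OpenAI’s numbers, and `sample_sources` is an illustrative helper.

```python
import random
from collections import Counter

# Hypothetical mixture weights (NOT OpenAI's real, undisclosed proportions).
MIX_WEIGHTS = {
    "web_pages": 0.60,
    "books": 0.15,
    "code": 0.10,
    "forums": 0.10,
    "wikipedia": 0.05,
}

def sample_sources(weights: dict[str, float], n: int, seed: int = 0) -> list[str]:
    """Pick the source for each of n training documents, in proportion to its weight."""
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    names = list(weights)
    return rng.choices(names, weights=[weights[name] for name in names], k=n)

picks = sample_sources(MIX_WEIGHTS, n=10_000)
for source, count in Counter(picks).most_common():
    print(f"{source:10s} {count / len(picks):.1%}")
```

The printed shares land close to the weights you choose. A real pipeline would also deduplicate and filter each source before mixing.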

OpenAI trained the ChatGPT model on a mixture of licensed data, data created by human trainers, and publicly available text from the web. This training came in two phases:

1. Pretraining: In this phase, a language model is trained on a large corpus of publicly available text from the internet. OpenAI has not publicly disclosed the specific data sources, data volume, or exact documents used for pretraining.

2. Fine-tuning: After pretraining, the model is fine-tuned on custom datasets created by OpenAI. These datasets include demonstrations of correct behavior and comparisons that rank different responses. Some of the prompts used for fine-tuning may come from user conversations on platforms like ChatGPT, with steps taken to remove personally identifiable information (PII).
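OpenAI hasn’t described exactly how it strips PII. The sketch below only shows the basic idea of redacting obvious identifiers from a prompt before it is stored. The two regular expressions and the `redact_pii` helper are hypothetical and far less thorough than production PII filtering.

```python
import re

# Hypothetical patterns: real PII filtering covers far more than emails and phone numbers.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def redact_pii(text: str) -> str:
    """Replace email addresses and phone-number-like strings with placeholder tags."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text

prompt = "Email me at jane.doe@example.com or call +1 (555) 123-4567 about my order."
print(redact_pii(prompt))
# prints "Email me at [EMAIL] or call [PHONE] about my order."
```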

How ChatGPT Learns from Human Interactions

ChatGPT gets smarter through a process that’s kind of like learning how to ride a bike.

Reinforcement learning from human feedback (RLHF) lets it adjust its responses, just as you’d change your balance based on tips from those who’ve done it before.

This feedback loop is key to fine-tuning the way ChatGPT talks to us. Think about when someone corrects your pronunciation: it’s like that, but for an AI model.

A group of trainers guides this machine-learning marvel, nudging it towards answers that are not just accurate but also helpful and relevant. It’s teamwork at its finest: human intelligence combines with artificial intelligence, leading to responses that feel more natural and less robotic.

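The secret sauce? Trainers rank several candidate answers to the same prompt. Those rankings are used to train a reward model, and reinforcement learning then nudges the generative pre-trained transformer behind ChatGPT toward answers the reward model scores highly, so it answers better next time around.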
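Here is a minimal sketch of how one trainer comparison can become a training signal. It uses the pairwise loss described in OpenAI’s InstructGPT work, -log(sigmoid(r_chosen - r_rejected)). This loss is small when the reward model already scores the preferred answer higher and large when it does not. The scores are hypothetical numbers. In practice the reward model is a neural network, and ChatGPT itself is then optimized against it with a reinforcement learning algorithm (PPO in InstructGPT).

```python
import math

def pairwise_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Compute -log(sigmoid(reward_chosen - reward_rejected)) for one human comparison."""
    diff = reward_chosen - reward_rejected
    # Numerically stable softplus(-diff), which equals -log(sigmoid(diff)).
    return max(-diff, 0.0) + math.log1p(math.exp(-abs(diff)))

# Hypothetical reward-model scores for two answers to the same prompt,
# where a human trainer preferred answer A over answer B.
score_a, score_b = 2.0, 0.5
print(round(pairwise_loss(score_a, score_b), 4))  # model agrees with the trainer: lower loss
print(round(pairwise_loss(score_b, score_a), 4))  # model disagrees: higher loss
```

Minimizing this loss over many comparisons pushes the reward model to score preferred answers higher. That learned score is what the reinforcement learning step then optimizes.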

The Role of Wikipedia and Web Content in Training ChatGPT

Imagine tapping into the world’s biggest encyclopedia for a school project. That’s kind of what ChatGPT does with Wikipedia articles during its training.

With such extensive coverage on a kaleidoscope of topics, it’s no wonder that these pieces are a go-to source to fill its knowledge tank.

But here’s where things get even spicier: public webpages come into play too, giving our AI buddy real-world context— like seasoning adding flavor to food.

Tapping Into the Encyclopedia of the Web

We’re talking about an expansive body of text that ChatGPT learned from during training, which helps make this virtual assistant not just book-smart but street-wise as well.

This means when you ask it something, it draws on patterns learned from a vast range of writing, not unlike how we humans learn from everything around us.

Public Webpages as Learning Material for AI

Beyond just facts and figures, learning from various online sources allows ChatGPT to understand nuance and deliver responses that resonate more deeply with us humans. It’s like having conversations across different cultures — it gets better by experiencing diversity.

Further Reading: How Does ChatGPT Work: A Step-by-Step Breakdown.

Limitations and Challenges of ChatGPT

ChatGPT can be a bit of a double-edged sword.

On one hand, it has this impressive ability to generate human-like responses, but on the flip side, it might also provide something factually incorrect or biased.

This is where OpenAI steps in with some nifty AI safety measures.

Navigating Misinformation Challenges

Misinformation can spread like wildfire when not kept in check.

To keep things factual, OpenAI keeps refining its methods for reducing misleading or false information in ChatGPT’s answers.

Read about OpenAI’s safety policy.

Mitigating Societal Biases

We all know biases are no good; they’re like unwanted guests at a party.

The team behind ChatGPT studies its training data and outputs and keeps adjusting the model so that what comes out doesn’t lean unfairly one way or another.

For more about these ongoing efforts, take a look at how OpenAI is mitigating biases in machine learning models.

FAQs – Where Does ChatGPT Get Its Data

Where does ChatGPT get information from?

ChatGPT pulls its info from a massive pool of internet text, including books and websites, up to a training cutoff date that varies by model version.

What is ChatGPT trained on?

It’s schooled on an eclectic mix: everything from literary classics to online articles published before its training cutoff, plus licensed data and examples created by human trainers.

How accurate is the data in ChatGPT?

Data accuracy varies. ChatGPT reflects the text it was trained on as of its training cutoff, errors included, and it can stumble with recent or real-time facts.

How does OpenAI get its data?

OpenAI trains its models on a mix of publicly available text from many genres and domains, licensed data, and data created by human trainers, and processes this data before using it for AI training.

Conclusion

Peering into ChatGPT’s brain, you’ve seen where it pulls its smarts from. It learned by soaking up books, websites, and conversations and feedback from human trainers.

Remember: large language models like ChatGPT are only as good as the data behind them. That’s why knowing where ChatGPT gets its data matters.

Feedback shapes us; it shapes AI too. ChatGPT doesn’t learn from your chats in real time, but OpenAI may use conversations (unless you opt out) to train and improve future versions.

Tread carefully though. With every word it spins out, there lies a responsibility to guide AI away from biases and misinformation pitfalls that could trip us all up.

If you’re worried about data privacy, there are ChatGPT alternatives like BrandWell that can guarantee no personal information of yours will be shared with the world. Try it today!