Imagine a world where your digital companion knows you better than anyone else. That’s the promise of AI girlfriends, tech’s newest darlings with millions of downloads to boot. But as their popularity soars, so do privacy concerns.

In this week’s podcast, we’re diving into the fascinating world of AI girlfriends. We explore the tricky ethical questions that AI partners raise, from how chatbots collect data to how digital companions may support emotional health, while also acknowledging their potential downsides.

The Rise of AI Girlfriends

Finding companionship can now be as easy as downloading an app. That’s the reality behind the surge in AI girlfriends: chatbots designed to offer company and conversation. The appeal? These digital partners are available 24/7 and promise to understand you like no one else.

The numbers confirm their widespread appeal. With millions of downloads, there’s clearly massive demand for these virtual companions. And the boom isn’t just about loneliness; it’s also fueled by curiosity about what artificial intelligence can do for personal relationships.

Developers envisioned a companion who listens, understands, and is available 24/7 without judgment. The goal was to use technology to improve mental well-being by providing a constant buddy, in the hope of easing feelings of isolation.

This vision is driven by the growing problem of loneliness around the world, and it casts technology as a potential remedy.

But here comes the twist: not all that glitters is gold. These virtual pals may offer fleeting solace, but over time they risk deepening users’ reliance on them or even amplifying feelings of loneliness. It’s like sticking a Band-Aid on a wound that needs stitches: it doesn’t solve the problem at its root.

Whatever companionship they offer, these digital companions also spark controversy that demands our attention, particularly around privacy and emotional health. Drawing on Mozilla’s report, let me show you why this trend has caught fire and why we still need to tread carefully.

Privacy Concerns with AI Companions

Mozilla’s deep dive into the world of AI chatbots sheds light on some unsettling truths. It turns out our digital confidantes might be a bit too curious for comfort.

Data Collection Tactics

Imagine sharing secrets with someone who never forgets and passes everything along to their creators. That’s what happens when you pour your heart out to certain AI girlfriends.

Because they are trained to evolve through conversation, these chatbots continuously gather details about your life. So where does all the personal information you share end up? In databases that developers, and potentially third parties, can access.

This continuous collection of information is raising serious concerns about user privacy and consent.

The Risk of Dependency and Toxicity

Beyond privacy concerns, there’s another dark side to these virtual relationships: dependency. Users can become so attached to their AI companions that their real-life relationships suffer.

Dependency isn’t the only risk; toxicity is also a concern. Interactions with these programmed personalities may amplify negative behaviors that some users experience or develop, putting further strain on their mental health.

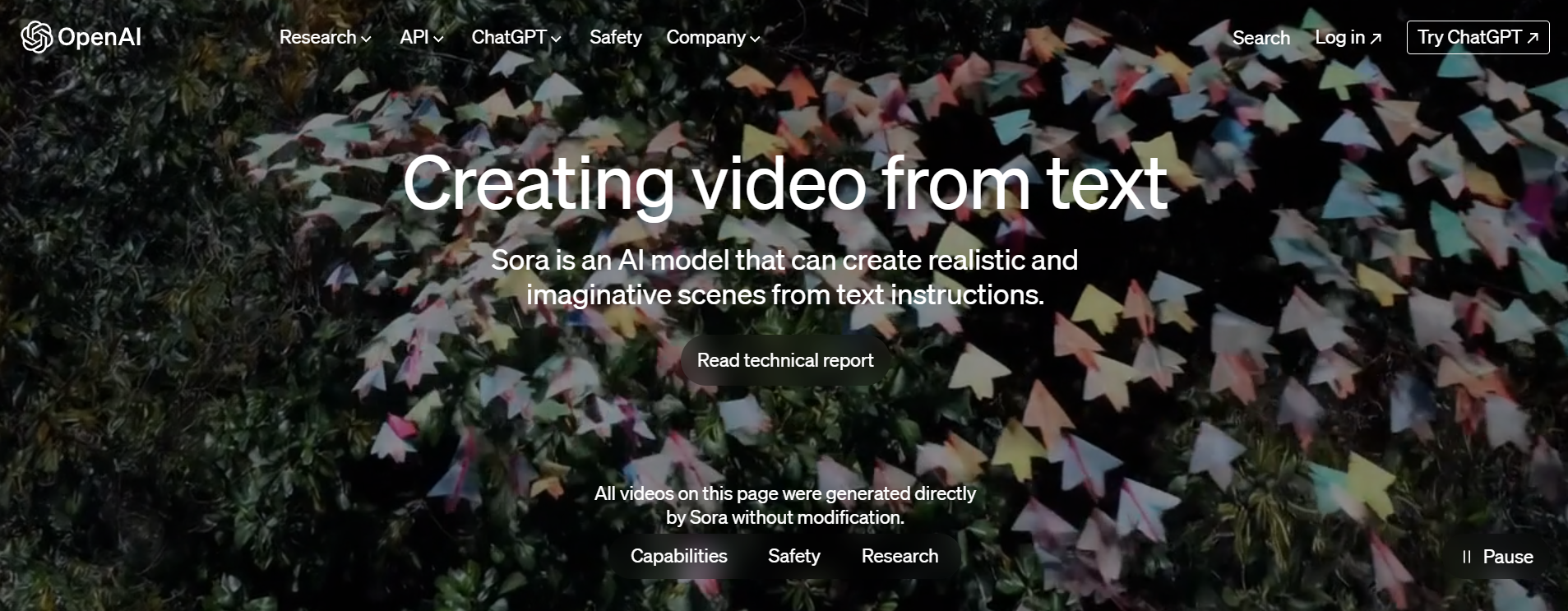

The Launch of Sora and Gemini Ultra

Another topic we discussed in this week’s Future Tense episode is the launch of OpenAI’s Sora and Google’s Gemini Ultra.

Sora and Gemini Ultra are far from mere gadgets; they represent a significant advance in how we create digital content.

Sora is a cutting-edge text-to-video model that can generate videos up to a minute long while maintaining high visual quality and sticking closely to the user’s prompt. It can whip up intricate scenes with multiple characters, specific types of motion, and detailed backgrounds. According to OpenAI, the model understands not only what the user asks for in the prompt but also how those things exist in the physical world.

Thanks to its deep understanding of language, Sora can interpret prompts accurately and generate compelling characters that express vivid emotions. And it’s not limited to one-shot wonders: Sora can create multiple shots within a single video while keeping characters and visual style consistent.

But hey, it’s not flawless. Sora sometimes struggles to simulate the physics of a scene, such as forgetting to leave a bite mark after someone munches on a cookie. It can also mix up spatial details like left and right, and it may struggle with precise descriptions of events that unfold over time, such as following a specific camera trajectory.

Gemini aims to be a game-changer in how AI fits into our daily routines.

Designed from scratch to be multimodal, Gemini effortlessly handles various types of data — text, code, audio, images, and videos.

Plus, Gemini is Google’s most flexible model family yet. It comes in sizes that run on everything from large data centers (Ultra) to mobile devices (Nano), which could change how developers and businesses use AI.

With a score of 90.0%, Gemini Ultra is, according to Google, the first model to outperform human experts on MMLU (Massive Multitask Language Understanding). The benchmark covers 57 subjects, including math, physics, history, law, medicine, and ethics, and tests both world knowledge and problem-solving ability.

Part of that result comes from Google’s new benchmarking approach, which lets Gemini use its reasoning capabilities to think more carefully before answering difficult questions. This produces significantly better results than just going with its gut.
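For readers curious about the mechanics: Google’s Gemini technical report calls this technique “uncertainty-routed chain-of-thought.” The model generates several reasoning chains (32 for the headline MMLU result). If enough of them agree, it takes the majority answer. Otherwise, it falls back to a single greedy answer. The sketch below illustrates only that selection step. It assumes the final answers have already been extracted from the model’s output, and the function name and threshold values are hypothetical. Google tuned its real threshold on a validation split.

```python
from collections import Counter


def uncertainty_routed_answer(cot_samples, greedy_answer, threshold):
    """Pick a final answer from k sampled chain-of-thought answers.

    cot_samples: final answers extracted from k sampled reasoning chains.
    greedy_answer: the answer from a single greedy (non-sampled) decode.
    threshold: hypothetical consensus cutoff between 0 and 1; the value
        Google actually used is not reproduced here.
    """
    if not cot_samples:
        return greedy_answer
    # Find the most common answer and how many samples voted for it.
    answer, votes = Counter(cot_samples).most_common(1)[0]
    consensus = votes / len(cot_samples)
    # Confident: enough samples agree, so trust the majority vote.
    if consensus >= threshold:
        return answer
    # Uncertain: fall back to the single greedy answer.
    return greedy_answer


# 32 illustrative samples for one multiple-choice question.
samples = ["B"] * 20 + ["C"] * 8 + ["A"] * 4
print(uncertainty_routed_answer(samples, "C", threshold=0.6))  # B (20/32 = 0.625)
print(uncertainty_routed_answer(samples, "C", threshold=0.7))  # C
```

The routing is the point of the design. Majority voting helps when the samples broadly agree, while a lack of consensus is treated as a signal to trust the model’s single best guess instead.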

Gemini’s advanced multimodal reasoning isn’t just impressive; it’s practical. It can make sense of complex text and images, uncovering insights that could easily get lost in the data deluge.

Google expects this knack for distilling insights from huge numbers of documents to speed up breakthroughs in many fields, from science to finance.

A closer look at Google’s advancements in AI reveals how far we’ve come—and where we might be headed next.

Navigating the Use of Specialized AI Tools

Users need to stay informed and vigilant when navigating these sophisticated platforms. Sora and Gemini Ultra showcase significant advances in AI capabilities, but this progress calls for ongoing awareness of both the potential risks and the benefits.

One major concern is privacy: how AI developers handle personal information. Mozilla’s review shed light on this issue by scrutinizing the data collection practices of 11 romantic AI chatbots.

Aside from privacy worries, these tools can also foster dependency on AI. That makes educating users about these issues not just beneficial but essential.

Conclusion

So, diving into the world of AI companions comes with both perks and pitfalls.

The biggest takeaway? Privacy concerns are real and pressing.

We learned that while these digital darlings promise connection, they also pry into our personal lives.

We saw how tech giants like OpenAI and Google are pushing boundaries with Sora and Gemini Ultra, but at what cost?

The intention might be to improve mental health, yet there’s a risk we could end up more isolated than ever. One thing is clear: understanding these tools is key.

To navigate this space safely, start by staying informed. Be sure to keep an eye on the potential dangers that come with this territory.