So, you’ve been using ChatGPT, OpenAI’s conversational AI. You’re amazed at how ChatGPT works — generating human-like text and responding to your queries in a snap.

You’re hooked! It’s like having an assistant that never sleeps. But then, late one night while sipping on your cup of tea, a thought pops into your head:

“Is ChatGPT safe?”

In this article, we will address that question. People have real and valid concerns about data privacy and security, and we will cover these issues in detail.

Table of Contents

- Is ChatGPT Safe? Decoding the Safety of AI Chatbots

- Data Handling and Protection in ChatGPT

- When Is It Safe (and Not Safe) to Use AI Chatbots?

- Security Measures in ChatGPT

- Guarding Your Privacy with ChatGPT: The Lowdown

- Using ChatGPT Ethically

- OpenAI’s Safety and Security Strategy: Slow and Steady

Is ChatGPT Safe? Decoding the Safety of AI Chatbots

ChatGPT is generally safe to use for common questions and general needs. However, we do not recommend using it with personal or proprietary business information.

Whether you are using ChatGPT with GPT-4, Gemini, Perplexity AI, or any other ChatGPT alternative, you should be cautious about what data you put into your prompts.

In this next section, we will cover some of the concerns.

Risks of Conversational AI

I’ve outlined a 4-point framework to classify ChatGPT security risks.

Here are the scenarios where ChatGPT could be employed to aid a cybercriminal:

Risk #1: Data Theft: When it comes to data theft, we’re talking about unauthorized access to, and sneaky snatching of, confidential information on a network. This could be your personal info, passwords, or even top-secret source code. All this crucial information could end up in the hands of hackers, ready to unleash mayhem through things like ransomware attacks. Even for something as simple as creating a resume with ChatGPT, you may want to omit your actual name, address, and other identifying information. Use filler content for those personal details.
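To make the "use filler content" advice concrete, here is a minimal sketch of swapping personal details for placeholders before a prompt ever leaves your machine. The patterns and the `redact` helper are hypothetical and written for illustration only; real PII detection needs far broader coverage than two regular expressions.

```python
import re
from typing import Sequence

# Illustrative patterns only (assumption): they catch common email and
# phone formats, not every possible way personal data can appear.
PATTERNS = {
    "[EMAIL]": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "[PHONE]": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str, names: Sequence[str] = ()) -> str:
    """Replace emails, phone-like numbers, and listed names with placeholders."""
    for placeholder, pattern in PATTERNS.items():
        text = pattern.sub(placeholder, text)
    for name in names:
        # Names cannot be guessed reliably, so the caller lists them explicitly.
        text = re.sub(re.escape(name), "[NAME]", text, flags=re.IGNORECASE)
    return text


prompt = "Write a resume for Jane Doe, jane.doe@example.com, +1 (555) 010-9999."
print(redact(prompt, names=["Jane Doe"]))
```

You can paste the redacted text into the chatbot and fill the real details back in on your own device afterwards.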

Risk #2: Phishing Emails: Picture this – you get an email that looks totally legit, but it’s actually a trap! That’s called phishing. Cybercriminals whip up these crafty ChatGPT emails to fool you into doing their bidding. This could mean clicking on sketchy links, opening suspicious attachments, giving away sensitive info, or even wiring money to their secret accounts.

Risk #3: Malware: Malware is the big bad wolf of the digital world. It’s the umbrella term for all sorts of nasty software that’s out to get you. Malware’s mission? Sneak into your private servers, swipe your precious info, or just wreck your data for fun. It’s the ultimate troublemaker.

Risk #4: Botnets: Imagine a cyber army under the command of a hacker – that’s a botnet. These sneaky attackers infiltrate a bunch of Internet-connected devices and infect them with malicious software. It’s like a robot network gone rogue. The hacker’s goal? To seize control of this army for all sorts of shady activities.

ChatGPT as a Hacking Tool

This powerful chatbot can craft convincing documents and even phishing emails, posing a risk to unsuspecting individuals who may unknowingly reveal sensitive information.

Furthermore, the learning capabilities of ChatGPT have raised some eyebrows. Malicious individuals could exploit this tool to acquire programming skills and gain knowledge about network infrastructure, potentially leading to cyberattacks.

An alarming example that highlights these concerns was shared on Twitter. A user posted detailed instructions, generated by GPT-4, on how to hack a computer. This emphasizes the need for robust safety measures when utilizing such advanced AI tools.

Another area where ChatGPT’s abilities could be misused is code generation. By providing plain English requests, anyone can generate program code using this AI system. The introduction of new plug-ins in ChatGPT has made it capable of running self-generated code as well.

These possibilities raise questions about the safe usage of ChatGPT and emphasize the importance of responsible handling when utilizing advanced technology platforms.

ChatGPT Scams

OpenAI regularly releases updates that expand ChatGPT’s capabilities, some of which are available only to paying subscribers. This creates opportunities for scammers, who lure people with offers of free, faster, or more advanced features.

However, it’s crucial to remember that if something sounds too good to be true, it likely is.

Don’t fall for these ChatGPT scams. Exercise caution when receiving ChatGPT offers via email or social media platforms. For accurate information, refer directly to trusted sources like OpenAI’s official website.

Fake ChatGPT Apps

When it comes to using AI, particularly in the form of chatbots like ChatGPT, one key aspect of safety is steering clear of counterfeit versions of these apps.

The official ChatGPT app is published by OpenAI and is available for both iOS and Android. Any app from a different publisher that claims to be the official ChatGPT app is fraudulent. These phony applications are unfortunately plentiful on both platforms, often masquerading as genuine products.

Most fake apps try to entice users into paying for the download while others have more sinister intentions such as data theft or introducing malware onto your device. Some unscrupulous creators design these sham apps with the sole aim of harvesting user data which they then sell off to third parties without consent.

Besides dodgy mobile applications, several websites also misuse the term “ChatGPT.” For instance, they may incorporate it within their domain names in an attempt to appear legitimate. You can usually spot this misuse with one simple check: if a site other than OpenAI’s official website offers ChatGPT as downloadable software, treat it as not authentic.
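The domain check described above can be sketched in a few lines. The allowlist below is an assumption made for illustration; confirm OpenAI's current domains yourself before relying on it. The point is to compare the actual hostname rather than search the URL for the word "chatgpt".

```python
from urllib.parse import urlparse

# Assumption: domains believed to be operated by OpenAI. Verify before use.
OFFICIAL_DOMAINS = {"openai.com", "chatgpt.com"}


def is_official(url: str) -> bool:
    """Return True only if the URL's host is an allowlisted domain or a subdomain of one."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in OFFICIAL_DOMAINS)


for url in (
    "https://chat.openai.com/",
    "https://chatgpt-download.example.com/",
    "https://openai.com.evil.example/login",
):
    print(url, "->", is_official(url))
```

Matching on the full hostname is what rejects lookalikes such as `openai.com.evil.example`, where the trusted name appears only as a prefix.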

Your best bet against falling prey to such scams lies in staying informed about how phishing scams work and always ensuring that you’re using software from trusted sources only.

In this digital age where everything seems to be just a click away, safety should never take a backseat. ChatGPT, as one of the first publicly available AIs with impressive language skills, serves as a reminder of the challenges and successes associated with AI technology.

It’s important to be mindful of the risks, such as hacking threats and misuse possibilities, that come with new technologies. Therefore, it’s essential to use online services cautiously and prioritize the protection of your personal data.

Check out our guide on how to use ChatGPT properly and safely.

Data Handling and Protection in ChatGPT

OpenAI, the developer of ChatGPT, states that it prioritizes protecting the user data it collects. It focuses on safeguarding personal data in compliance with regulations such as the California Consumer Privacy Act (CCPA) and the General Data Protection Regulation (GDPR) of the European Union.

Let’s delve into how ChatGPT ensures data handling and protection.

The Privacy Policy of OpenAI: What You Need to Know

First and foremost, it is crucial to understand OpenAI’s privacy policy.

OpenAI only discloses information to external entities, such as vendors or law enforcement organizations, when it is absolutely necessary.

Confidentiality is a stated priority. OpenAI says it works to keep personal data private and protected against unauthorized disclosure.

Still, incidents can happen, even in AI systems. On March 20, 2023, ChatGPT users reported seeing titles from other people’s chat history, and some payment-related information may also have been exposed. OpenAI responded promptly by fixing the issue and strengthening its security measures.

While no system can guarantee complete immunity against evolving cybersecurity threats, OpenAI is dedicated to continuous improvement.

OpenAI remains proactive in mitigating risks and strictly adheres to CCPA and GDPR standards. They conduct regular internal audits and provide public updates on any changes that impact users’ rights regarding their personal data.

When Is It Safe (and Not Safe) to Use AI Chatbots?

The safety of using AI chatbots depends on several factors, including the context, purpose, and design of the app.

Here’s when it’s generally safe to use ChatGPT and when it might not be.

Safe to Use AI Chatbots:

- Information Retrieval: AI chatbots are generally safe when used for retrieving factual information. They can quickly provide answers to questions about general knowledge, weather, news, or data from reliable sources.

- Routine Tasks: Using chatbots for routine, repetitive tasks like setting reminders, ordering products, or making reservations can be safe and convenient.

- Customer Support: Many businesses use AI chatbots for customer support inquiries, such as checking order status or providing basic troubleshooting. These can be safe as long as they are programmed to handle inquiries appropriately.

- Entertainment: Chatbots designed for entertainment, like virtual assistants or games, are typically safe for leisure and amusement.

Not Always Safe to Use AI Chatbots:

- Sensitive Information: Avoid using AI chatbots to share sensitive personal information like social security numbers, credit card details, or passwords. They may not always guarantee data security.

- Medical or Legal Advice: While AI chatbots can provide general health or legal information, they should not be relied upon for medical diagnoses, treatment recommendations, or legal advice. You should always consult professionals for life and health matters.

- Critical Decision-Making: Avoid using AI chatbots for critical decisions, especially in situations that require ethical or moral judgment. They lack human empathy and might not understand the nuances of complex situations.

- Inappropriate Content: Some chatbots can generate inappropriate or offensive content, so it’s not safe to use them in settings where such content is unacceptable, like professional or educational environments.

- Emotional Support: While AI chatbots can provide basic emotional support through scripted responses, they should not be used as a substitute for mental health professionals in cases of severe emotional distress or mental health issues.

- Security Risks: Poorly designed or unsecured chatbots can be vulnerable to hacking or exploitation. It’s essential to choose reputable and secure chatbot platforms.

- Language Limitations: AI chatbots may struggle with understanding or generating content in languages they’re not trained for, leading to miscommunication.

AI chatbots can be safe when used within their intended scope and for non-sensitive, routine tasks. However, they may not always be suitable for handling sensitive or critical matters. Always exercise caution, use your judgment, and be aware of the limitations of AI chatbots in various contexts.

Security Measures in ChatGPT

In the digital world, unauthorized access is a looming threat. OpenAI has taken extensive measures to ensure that the ChatGPT app and ChatGPT API do not become easy targets for hackers. Let’s delve into the security measures they have implemented to safeguard your interactions.

The first line of defense is user authentication systems. These systems check that you are a legitimate user each time you sign in or use the API, making sure only approved users can access your account.

Password protection is another crucial security feature. Services typically store your password as a salted one-way hash (often loosely called password encryption) rather than as readable text, so it stays unreadable even if someone manages to bypass other security measures.
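As a rough illustration of how a service can store passwords without keeping them readable, here is a minimal sketch using a salted one-way hash from Python's standard library. This is not OpenAI's implementation, which the article does not describe; the function names and the iteration count are assumptions chosen for the example.

```python
import hashlib
import hmac
import os
from typing import Tuple

# Assumption: an illustrative work factor, not a value taken from any real service.
ITERATIONS = 600_000


def hash_password(password: str) -> Tuple[bytes, bytes]:
    """Return (salt, digest); only these two values would be stored."""
    salt = os.urandom(16)  # a fresh random salt for every password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, digest: bytes) -> bool:
    """Re-derive the digest from the login attempt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, digest)


salt, digest = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, digest))
print(verify_password("guess", salt, digest))
```

Because the hash cannot be reversed, someone who steals the stored values still has to guess each password, and the salt means identical passwords do not produce identical records.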

When data is transmitted from one place to another, SSL/TLS encryption comes into play. This encryption technology ensures that your information remains private while it travels between devices and servers. It acts as an invisible shield, making it extremely challenging for hackers to intercept and access the data during transmission.
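For readers who connect to an API from their own scripts, the sketch below shows what "using TLS properly" looks like on the client side with Python's standard `ssl` module. The helper names are hypothetical, and any host you pass in is your own choice. The key point is that the default context already verifies the server's certificate and hostname, and those checks should stay on.

```python
import socket
import ssl


def make_client_context() -> ssl.SSLContext:
    """Build a client context that verifies certificates and hostnames."""
    ctx = ssl.create_default_context()  # verification is enabled by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse older protocol versions
    return ctx


def negotiated_tls_version(host: str, port: int = 443) -> str:
    """Open a verified TLS connection and report the protocol version in use."""
    ctx = make_client_context()
    with socket.create_connection((host, port), timeout=10) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.version()


ctx = make_client_context()
print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)
```

Calling `negotiated_tls_version` with a hostname requires network access, and it raises an `ssl.SSLError` subclass if the certificate does not check out, which is exactly the failure you want to see on a tampered connection.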

Regular system audits conducted by cybersecurity experts at OpenAI also play a vital role in maintaining the security of ChatGPT. These audits function like detectives, constantly searching for vulnerabilities and signs of intrusion attempts. By staying one step ahead of potential hackers, OpenAI ensures that unauthorized access is prevented.

In addition, OpenAI regularly releases updates to promptly address any identified issues. This commitment to continuous improvement ensures high safety standards across all platforms.

Whether you’re using the ChatGPT app or engaging with millions of others who enjoy daily conversations with this AI model, you can trust the security measures implemented by OpenAI.

Three key elements to keeping data safe, from TechTarget

In addition to OpenAI’s policies, here are some safety measures most AI companies enact to counteract potential threats:

- Maintaining regular software updates and stringent access controls helps prevent unauthorized intrusion.

- Educating users about safe interactions with AIs ensures that they do not unknowingly disclose sensitive personal information through conversational exchanges.

- Organizations must ensure adherence to strict data protection laws, storing user information securely while maintaining transparency regarding its usage.

To learn more about the ever-changing landscape of artificial intelligence, particularly automated conversation bots, see OpenAI’s official FAQ page.

Guarding Your Privacy with ChatGPT: The Lowdown

When you open an OpenAI account and sign up for ChatGPT, you will be required to submit your email address and phone number. But is it safe to do so?

The question of whether sharing personal data like your email address or phone number online is safe boils down to one thing: trust in the company’s privacy policy. In this case, we’re talking about OpenAI.

You see, OpenAI has built its reputation on solid ground rules around user data protection. The company has taken steps to protect against any unauthorized access or disclosure of confidential data.

While these policies are designed with security in mind, there are instances where OpenAI may share some details with third parties, but only under certain circumstances such as legal requirements or operational needs.

How to Keep Your ChatGPT Account Safe

The first line of defense for any online account is a strong, unique password. If your ChatGPT account settings offer two-factor authentication (2FA), turn it on; if they do not, a robust password matters even more.
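If you do not use a password manager, a robust password can be generated with a few lines of Python. This is a minimal sketch: the `generate_password` helper, the 20-character default, and the 12-character minimum are choices made for this example, not requirements from OpenAI.

```python
import secrets
import string

ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20) -> str:
    """Return a random password containing lower, upper, digit, and symbol characters."""
    if length < 12:
        raise ValueError("use at least 12 characters")
    while True:
        # secrets draws from the operating system's cryptographic random source.
        candidate = "".join(secrets.choice(ALPHABET) for _ in range(length))
        if (
            any(c.islower() for c in candidate)
            and any(c.isupper() for c in candidate)
            and any(c.isdigit() for c in candidate)
            and any(c in string.punctuation for c in candidate)
        ):
            return candidate


print(generate_password())
```

The `secrets` module is used instead of `random` because `random` is predictable and not meant for security purposes. Store the result in a password manager rather than reusing it across sites.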

Another crucial aspect of maintaining safety while interacting with chatbots like ChatGPT involves keeping personal information private. Remember that even though these bots are designed to simulate human conversation, they should not be privy to sensitive data about you or anyone else.

Bear in mind that all conversations with ChatGPT are accessible by OpenAI and may be used as training data for the bot’s learning process. In essence, this means your interactions could potentially appear in other users’ prompt results — another reason why it’s essential never to share personal details during these exchanges.

In order to ensure top-notch safety while using ChatGPT, familiarizing yourself with OpenAI’s privacy policy would be a smart move.

Beyond understanding these guidelines, make sure your connection is secure when accessing platforms like this, and keep unnecessary personal information out of your conversations with the AI.

My two cents? Anonymize your profile.

This means giving out just enough detail required for registration processes (like providing a generic name), without revealing too much of your identifiable specifics.

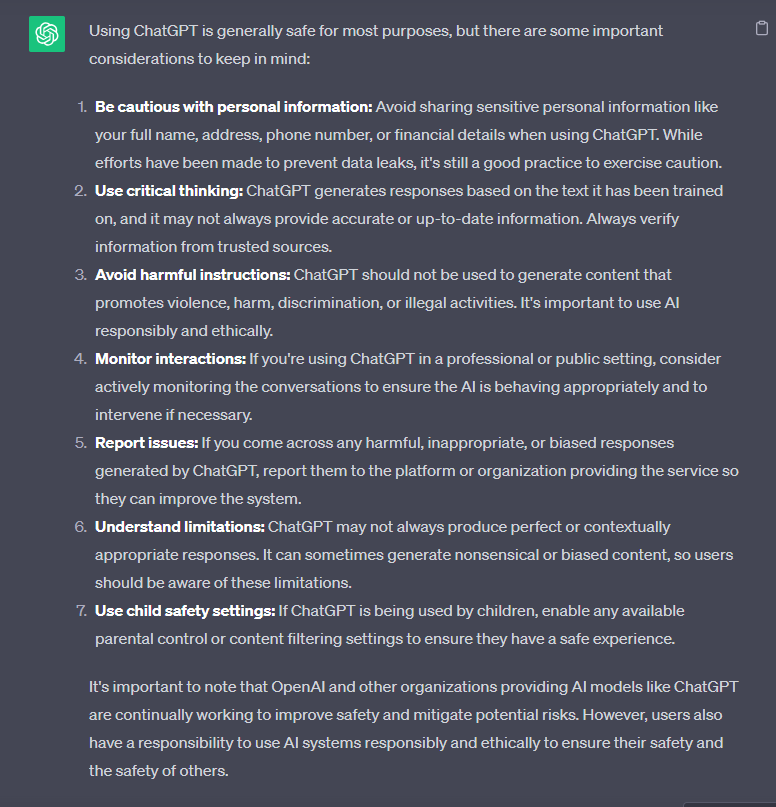

We asked ChatGPT if it is safe to use. This is how the chatbot responded to the prompt “Is it safe to use ChatGPT?”

Using ChatGPT Ethically

Despite the potential benefits of using AI language models like ChatGPT, there are also drawbacks associated with their use.

We have three main concerns when it comes to these AI systems: offensive content, plagiarism, and user trust.

Offensive Content

The big concern here is offensive content. Nobody wants their interaction with an AI model tainted by inappropriate or harmful text, right? That is why OpenAI has implemented safeguards against such output.

While no system can be 100% perfect, ChatGPT does its best. And if anything slips through the cracks, users are encouraged to report it promptly.

Plagiarism

Another concern is plagiarism. Generative AIs like ChatGPT have the potential to copy text from existing works. While ChatGPT is designed to produce original answers and content, it can still reproduce passages from its training material, so plagiarism remains possible.

User Trust

Last but certainly not least is user trust. The idea is not just about avoiding offensive content or maintaining originality; it is also about transparency and accountability. Critics argue that advanced AIs might deceive users into thinking they are chatting with real people instead of machine learning algorithms.

To address this issue, applications should clearly label whether a conversation is human-operated or powered by artificial intelligence.

The goal here is to build a trusted environment for everyone involved — developers creating applications based on GPT technology as well as end-users benefiting from them.

OpenAI’s Safety and Security Strategy: Slow and Steady

They aren’t just toying with AI — OpenAI is taking it seriously. They are fully committed to ensuring the utmost security of their official app.

Let’s delve into how they achieve this:

First, OpenAI takes a slow and steady approach to development. In an industry where speed often takes precedence over quality, OpenAI prioritizes thorough testing and scrutiny for each feature before its release. This meticulous process ensures both the functionality and security of the app.

Second, OpenAI’s research team works tirelessly to promptly address any bugs or vulnerabilities. They maintain a high level of application performance without compromising user experience or security measures.

In a world that thrives on instant gratification, OpenAI’s deliberate and cautious approach may seem counterintuitive. However, when dealing with the complexity of AI technology, rushing can lead to errors and mishaps.

OpenAI prioritizes catching potential issues before they become significant problems, ensuring that users receive only top-notch features from their platform.

No-Nonsense Approach Against Violations

OpenAI says it enforces its terms of service strictly, and it takes a strong stance against malicious activities that threaten the integrity of its system or compromise data privacy.

Its usage policies outline the consequences of breaching these rules.

At OpenAI, safety and security are not just buzzwords; they are core values deeply ingrained in every aspect of their operation. This commitment sets them apart in the ever-evolving landscape of artificial intelligence.

Frequently Asked Questions

Is ChatGPT Safe?

Yes, ChatGPT is generally safe to use. The AI chatbot and its generative pre-trained transformer (GPT) architecture were created by OpenAI to safely produce natural language responses and high-quality content in a manner that resembles human speech.

However, users should be cautious of potential risks such as phishing scams and unauthorized access.

Does ChatGPT collect data?

Yes, OpenAI collects user interactions with ChatGPT for training purposes but commits to privacy measures that align with regulations like CCPA and GDPR.

In addition to saving your chat history, ChatGPT also gathers information regarding your location, time zone, dates of access, device type, internet connection, and other relevant details. It is important to note that this data can potentially be used to personally identify you.

What are the concerns with ChatGPT?

Implementing AI chatbots such as ChatGPT within a business setting can introduce risks related to security, data breaches, confidentiality, liability, intellectual property rights, regulatory compliance, the limitations of current AI, and privacy.

For end users, among the main concerns are data privacy, AI-powered cybercrime, biased content generation, and possible misuse in spreading misinformation or offensive content.