Meta, the company behind Facebook and Instagram, has thrown its hat into the ring with the brand-new Meta AI app.

This isn’t just another chatbot clone aiming to replicate existing tools. Meta is adding its own signature social spin to AI interactions, integrating the kind of features you would expect from the company behind Facebook and Instagram. You might be wondering what makes the Meta AI app different and whether it deserves your attention for personal or professional use. Let’s explore Meta AI and what this application brings to the table.

Table of Contents:

- What Exactly is the New Meta AI App?

- The Social Twist: Meet the Discover Feed

- Talking with Meta AI: Voice Modes Explained

- How the Meta AI App Gets Personal

- Beyond the App: Where Else You Find Meta AI

- A Smart Move: Merging with the Ray-Ban App

- Why This Matters for Students and Professionals

- What About Privacy and Data?

- The Bigger Picture: AI Assistants Get Social

- Conclusion

What Exactly is the New Meta AI App?

Think of the Meta AI app as Meta’s direct response to tools like ChatGPT or Google Gemini. It’s a dedicated space, a standalone mobile app, where you can interact directly with Meta’s artificial intelligence. You can download it for your phone, as it’s available on both iOS and Android operating systems in select countries.

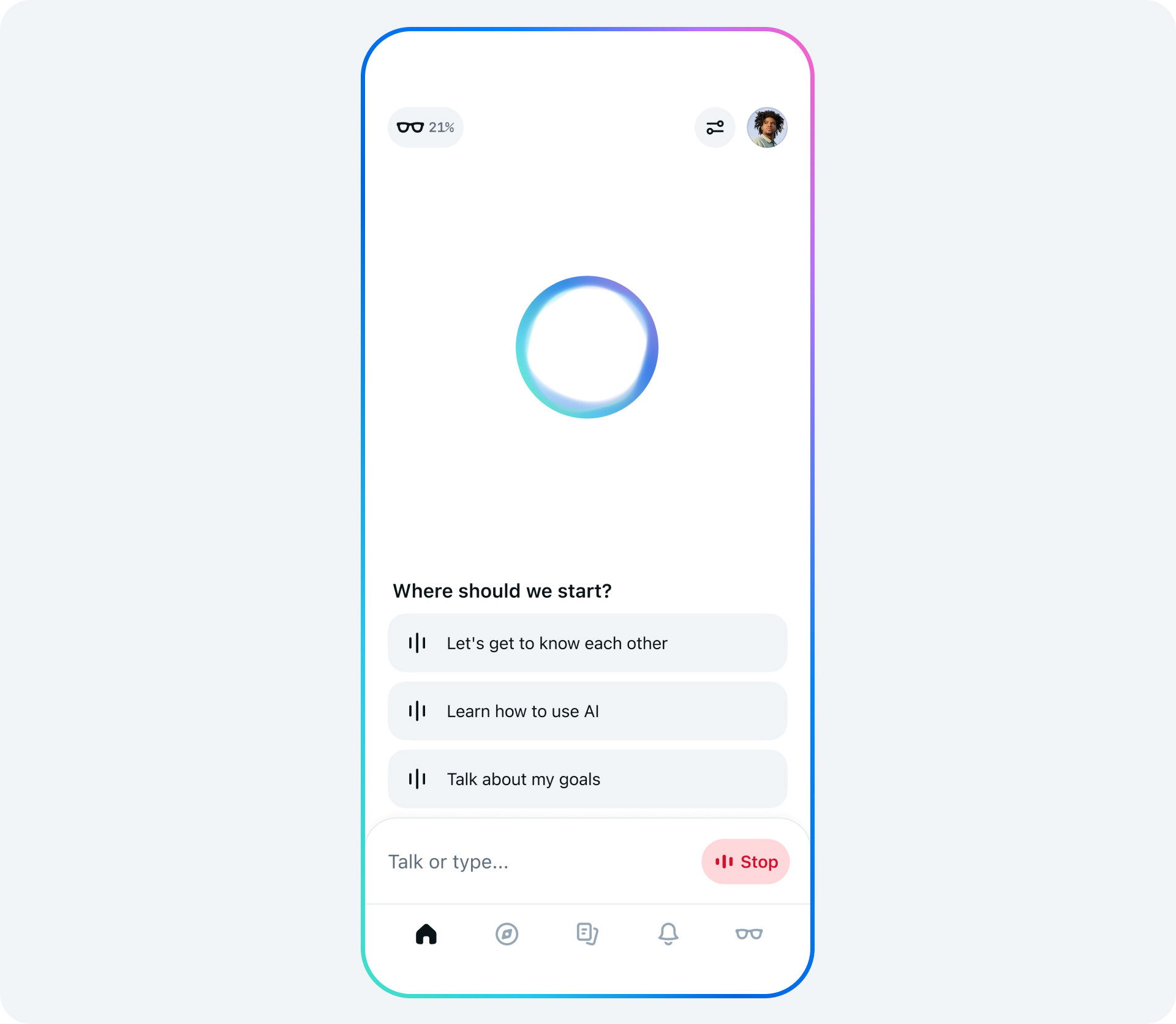

What can it do? The core functions are similar to what you’d expect from a modern personal AI helper. You can type out questions or choose to start talking to it using your voice, making interaction flexible.

The Meta AI app can generate images from text descriptions you provide and fetch real-time information from the web. This is possible thanks to integrations with search engines like Google and Bing. Because of this access, you’re not limited to information from its training data; you can get current results and the latest news too.

Underpinning these capabilities are foundation models developed by Meta. These models allow the app to understand requests, generate text, and create images. The goal is to offer a versatile and helpful AI assistant.

The Social Twist: Meet the Discover Feed

Here’s where the Meta AI app adds a distinct “Meta” touch. The application features a ‘Discover’ feed. This feed introduces a social media element directly into the AI experience.

Instead of keeping your chats completely private, you can browse interactions that other users have chosen to share publicly within the discover feed. People, potentially including friends from your existing Meta networks, can post specific prompts and the AI’s responses they found interesting, funny, or helpful.

You can then engage with these shared posts much as you would on other social platforms: like them, leave comments, share them further, or even “remix” them. Remixing lets you take someone else’s shared prompt as a jumping-off point for your own conversation with Meta AI, so you can explore Meta AI collaboratively.

Meta’s VP of Product, Connor Hayes, highlighted that the goal here is to demonstrate the possibilities of AI and make the technology feel less intimidating or abstract. By seeing concrete examples, users can get ideas for their own interactions. The feed lets users experience Meta AI in a shared context.

This social angle might seem logical coming from a social media giant like Meta. However, they aren’t alone in exploring the intersection of AI and social interaction. Elon Musk’s X platform integrates its Grok AI, and reports suggest OpenAI is developing features to make ChatGPT interactions more shareable. It signals a broader trend where AI is becoming more integrated into social ecosystems.

Talking with Meta AI: Voice Modes Explained

Voice interaction is a significant focus for the Meta AI app, encouraging users to simply start talking. You can converse with the AI naturally, similar to how you might interact with Siri or Google Assistant. But Meta is also testing more sophisticated voice capabilities.

An opt-in beta version of the voice mode is available, offering a glimpse into more advanced conversational AI. This mode aims for a much more fluid, back-and-forth conversation, perhaps closer to the advanced voice features demonstrated by OpenAI for ChatGPT. The aim is to move beyond simple turn-based question-and-answer exchanges toward something that feels like a genuine chat, so that talking to the app feels more natural.

This enhanced voice experience leverages Meta’s research into “full-duplex” AI models. Their published research details efforts to create AI capable of handling “rich synchronicity.” This translates to faster response times, the ability for both user and AI to interrupt or speak at the same time as people do in natural conversation, and the inclusion of subtle cues like “uh-huh” that indicate active listening (known as backchanneling).

Early demonstrations suggest this experimental full-duplex mode has noticeably more personality than standard voice assistants. Currently, the standard voice mode can readily access web information, while the beta full-duplex mode may have limitations in this area initially. Both voice options began rolling out progressively in the US, Canada, Australia, and New Zealand around April 2025.

How the Meta AI App Gets Personal

Meta aims for its AI assistant to feel highly relevant and helpful to each user. In the US and Canada, the Meta AI app can optionally use information derived from your activity on Facebook and Instagram to provide personalized responses. The concept is that your interactions on these platforms offer signals about your interests, helping the AI better anticipate what you might be looking for when you interact with it.

This personalization isn’t purely passive, however. As with memory features found in other advanced AI like ChatGPT, you can explicitly instruct Meta AI. You can tell it to remember specific preferences, hobbies, professional interests, or any other details you want it to retain for future conversations, making the assistant more personal.

You control this memory feature and can manage what the AI remembers or forgets, which lets you shape its understanding over time. The feature is designed to make interactions smoother and more context-aware.

Technically, the app runs on a version of Meta’s own large language model, Llama 4, tuned for assistant-like interactions. This model is the core engine powering its conversational abilities, understanding of context, and generation capabilities, and it represents some of Meta’s latest AI work.

Beyond the App: Where Else You Find Meta AI

While the standalone Meta AI app is the newest way to interact, you might have already encountered Meta AI elsewhere. Meta has been progressively integrating its AI features directly into its existing, widely used platforms. You can often find Meta AI access points within the search bars of Instagram, Facebook, and WhatsApp, which makes Meta AI easy to reach.

Interestingly, Meta anticipates that the majority of user interactions with its AI will continue to happen through these integrated points rather than solely via the dedicated mobile app. Connor Hayes indicated that interaction via these existing platforms has already reached a massive scale, touching nearly a billion users in some capacity. He did concede, however, that a standalone app often provides the most direct and focused way to chat with an AI assistant.

So, the app offers a concentrated, feature-rich experience, possibly including tools related to Meta’s AI Studio. But the AI’s presence is much broader, woven into the fabric of Meta’s ecosystem, including desktop web access in some forms. This strategy aims to make the latest AI readily available wherever users already spend their digital time, encouraging people to explore Meta AI features with little effort.

A Smart Move: Merging with the Ray-Ban App

The arrival of the Meta AI app involved an interesting strategic move. It wasn’t released as an entirely new download requiring users to seek it out independently. Instead, it effectively replaced an existing application: the “Meta View” companion app previously used for managing Ray-Ban Meta smart glasses (including the earlier Ray-Ban Stories models).

If you own these AI glasses, there’s no need for concern about losing functionality. The new Meta AI app incorporates a dedicated ‘Devices’ tab. This section preserves all the previous capabilities for managing your Ray-Ban Meta glasses, such as accessing the photo and video gallery where your captured media resides, checking battery status, and adjusting settings for paired devices.

Why merge the View app and the new AI assistant? Meta views its progress in AI as intrinsically linked to both software and hardware development. The Ray-Ban smart glasses are a key component of this long-term vision, representing a way to interact with AI beyond screens. These glasses already use AI for features like identifying objects in your field of view (“See what I see”) and recently added capabilities for real-time language translation.

Consolidating the apps reinforces the connection between the conversational AI assistant and Meta’s hardware ambitions, particularly its Ray-Ban smart glasses. Meta is reportedly planning future iterations of smart glasses, potentially incorporating advanced features like a small heads-up display. The merge streamlines the experience for those invested in Meta’s hardware and software ecosystem, so that captured media can transfer automatically and be managed alongside AI interactions.

Why This Matters for Students and Professionals

Okay, Meta has launched a new AI app integrating social elements. Why should this matter to you, whether you’re navigating academic life or your professional career? There are several potential advantages and applications worth considering.

For students, the Meta AI app could serve as a helpful study partner. Need a complex concept explained more simply? Ask the AI for a breakdown. Feeling stuck on a writing assignment? Use it to brainstorm ideas, create outlines, or even draft initial paragraphs (always remembering ethical use, proper citation, and avoiding plagiarism). It could also help summarize lengthy research papers or articles, keeping you informed and saving valuable study time.

Professionals can also find numerous uses for this AI assistant. Drafting emails, generating summaries of reports, or quickly recapping meeting notes could become more efficient. If you need creative sparks for a marketing campaign, presentation content, or problem-solving, the AI can act as a brainstorming collaborator. Developers might even use it for assistance with code snippets or debugging, depending on the coding strengths of the underlying Llama model.

The integrated image generation feature offers further utility. Students might create custom visuals for presentations or projects. Professionals could use it for concept mockups, unique social media content, or visualizing ideas discussed in meetings. Having this creative tool within the same assistant interface makes the overall content generation workflow more convenient.

Furthermore, the ‘Discover’ feed presents a learning opportunity. Observing how others formulate effective prompts for study tasks, professional writing, or creative projects could inspire your own usage. You might discover novel ways to leverage AI for productivity or learning that you hadn’t considered, which makes exploring AI a more collaborative process. In effect, the posts other users share double as informal demos and reference material.

What About Privacy and Data?

Whenever personalization drawing from social media activity is involved, questions about privacy naturally arise. It’s understandable to wonder precisely how your data from Facebook and Instagram might be used by the Meta AI app. Meta asserts that this linkage helps make the AI more relevant and useful for your specific needs and interests.

You are given certain controls over this process. Meta typically provides settings dashboards across its platforms to manage data usage and permissions. Within the Meta AI app, you can likely manage the AI’s memory feature and potentially influence the degree of personalization, although the exact controls might differ based on your region and are subject to change over time. Reading the app’s specific privacy settings and Meta’s general privacy policy is always recommended.

It’s important to remember how large AI models like Llama are trained. They learn from vast datasets, typically encompassing publicly accessible web information and licensed data collections. While your direct interactions help refine the AI’s responses specifically for you, the fundamental training relies on these broader information sources, which model developers often describe in their published documentation.

Transparency regarding data usage remains a critical aspect of deploying AI tools responsibly. As these assistants become more deeply integrated into daily digital life, understanding how personal information is collected, processed, and protected is essential. Always take the time to review the available settings and policy documents to stay informed about your data.

The Bigger Picture: AI Assistants Get Social

The introduction of the Meta AI app underscores a significant trend in artificial intelligence: AI is becoming increasingly social and deeply integrated into existing digital platforms. It’s evolving beyond simple, isolated question-and-answer bots. We are now seeing AI capabilities woven directly into social media feeds, messaging applications, and even wearable hardware like the Ray-Ban Meta glasses.

This convergence of AI and social interaction offers potential benefits. Discovering AI creations from others can spark inspiration and creativity. Shared prompts might provide useful templates or starting points for complex tasks. A sense of community could potentially develop around the innovative and productive use of AI tools.

However, this trend also brings important considerations. How will the quality and reliability of shared AI-generated content be assessed or moderated? Could socially amplified AI interactions inadvertently create echo chambers or accelerate the spread of misinformation? Finding the right balance between fostering the benefits of social connection and upholding standards of accuracy, safety, and user privacy will be an ongoing challenge for companies like Meta.

Meta’s strategy with the Discover feed represents a notable experiment in this area. It seeks to leverage the company’s established strengths in social networking to make sophisticated AI feel more approachable, collaborative, and engaging. How effectively this resonates with users and shapes the future development of conversational AI interfaces will be fascinating to observe, potentially influencing everything from user experience to career opportunities in AI development. Following Meta’s news blog or newsletter might offer further insights.

Conclusion

The new Meta AI app marks a significant step for Meta into the competitive field of generative AI assistants. It delivers core AI functionalities, including conversational chat, image generation, and access to real-time information via web search. Its standout characteristic, however, is the ‘Discover’ feed, which introduces a social layer to AI interaction, enabling users to share, view, and remix AI prompts and outputs.

Coupled with advancements in conversational voice modes, personalization features that can draw context from Facebook and Instagram activity, and strategic integration with hardware like the Ray-Ban smart glasses, the Meta AI app aspires to be a versatile and deeply connected personal AI. For students and professionals looking to enhance productivity, learning, or creativity, it presents a distinct set of AI features and tools within the expanding AI landscape. As artificial intelligence continues its rapid development, Meta’s socially integrated approach, part of its broader AI strategy, is definitely one to watch.
